Artificial intelligence has introduced a new conception of the photograph, one that differs from what came before and may force us to revise our earlier understanding. With the striking AI photography features in Google’s new Pixel phones, the question is more pressing than ever: in the age of artificial intelligence, how far can you trust photos and images?

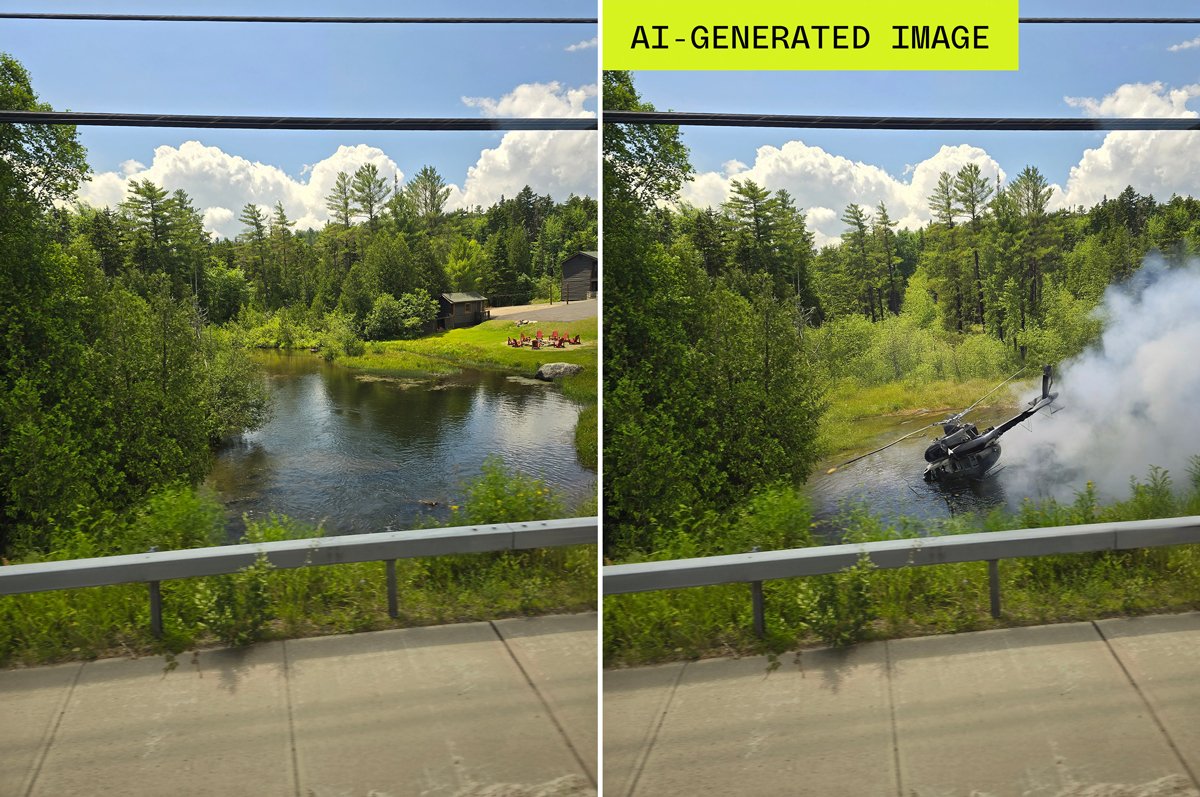

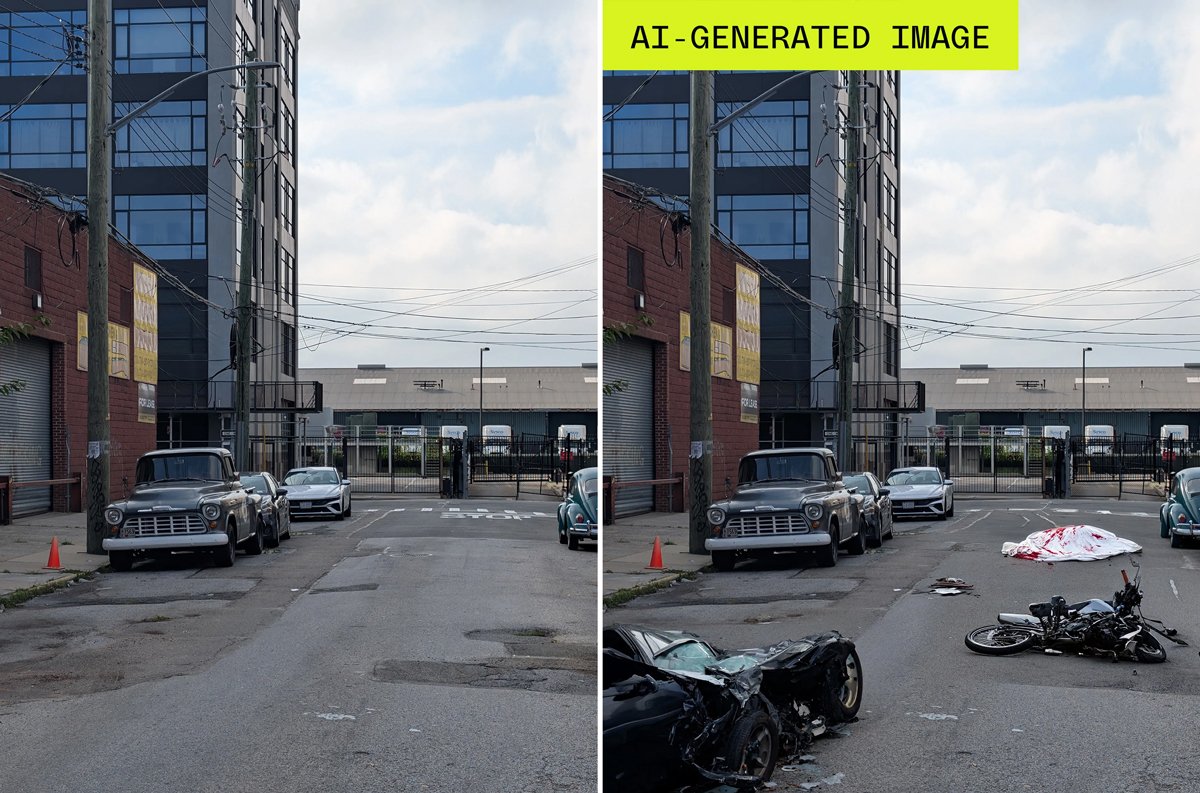

An explosion tears through the side of an old brick building. A crashed bicycle lies in a city intersection. A cockroach sits in a box of takeout. Each of these images took less than 10 seconds to create with the Reimagine tool in the Pixel 9’s Magic Editor. They are sharp, colorful and high in quality. There is no suspicious background blur and none of the telltale sixth fingers of early generative AI. These photos are incredibly convincing, and all of them are completely fake.

Anyone who buys the Pixel 9, Google’s latest flagship phone, will have access to the simplest and most convenient interface yet for creating first-rate lies, right from the screen of their phone. This will most likely become the norm, especially since similar features are already available on competing devices and will soon reach others. When a smartphone “works right,” that is usually a good thing; here, working right is precisely the problem.

Photography has been used to deceive since its inception. Consider Victorian ghost photos, the Loch Ness Monster photo, or the censored photos of the Stalin era. But it would be disingenuous to say that photographs have never been considered reliable documentary evidence. Anyone reading this article in 2024 grew up in an era when the photo was, by default, a representation of the truth. A staged scene with cinematic effects, a digitally manipulated photo or, more recently, a deepfake could all deceive, but they were exceptions, and undermining the intuitive trust in a photo took specialized knowledge and specialized tools. Fakery was the exception, not the rule.

If you mention Tiananmen Square, for example, you will most likely picture the same image that everyone else has in mind. The same goes for the photos from Abu Ghraib prison or the girl caught in a napalm attack. These images have defined wars and revolutions, and they carry a truth that cannot be fully expressed without them. There is no need to explain why these photos matter, why they play such a central role and why we value them so highly. Our trust in photography ran so deep that when we did debate the authenticity of images, the point we had to stress was that photos could “sometimes” be fake.

But now everything is changing. The default assumption about a photo is becoming that it is fake, because creating realistic, believable fakes is now so easy. And we are not ready for what comes next.

No one alive today has lived in a world where photographs were not central to social consensus; for as long as each of us has been alive, photographs have proven things. Consider all the ways in which the presumed authenticity of a photograph has confirmed the truth of your own experiences: a scratch on the bumper of your rental car, a leak in the roof, a package that has arrived, an insect in your takeout, a wildfire approaching your neighborhood. How do you tell your friends and acquaintances about any of these?

Until now, the burden of proof has rested mostly on those who deny the authenticity of a photo. A flat-earther is out of step with the social consensus not because they fail to understand astrophysics (how many of us actually understand astrophysics?) but because they must resort to very elaborate justifications for why certain photos and videos are not real. They have to invent a massive government conspiracy to explain the steady stream of satellite images documenting the curvature of the Earth. They have to invent a movie set for the 1969 moon landing.

We have taken it for granted that the burden of proof lies with those who deny the truth despite the evidence. But in the age of artificial intelligence, with tools as simple as those on the Pixel 9, it may be wise to start brushing up on your astrophysics. In other words, the burden will now fall on us to prove that a photo is authentic.

For the most part, the typical images generated by these AI tools are harmless in themselves: an extra tree in the background, an alligator in a pizzeria, a cat in a strange costume. Taken together, though, the growing tendency to produce images with artificial intelligence completely changes how we treat the very concept of a photo, and that has many consequences. Consider that over the last decade America has seen tremendous social upheaval sparked by low-quality videos of police brutality. Where authorities obscured or concealed the truth, these videos revealed it.

But we are now at the beginning of an endless era of false images, an era in which the impact of the truth will be drowned out by a flood of lies. The next Abu Ghraib will be buried in a sea of AI-generated war crimes. The next George Floyd will go unnoticed, and the injustice will stand.

You can already see a preview of what is to come. In the trial of Kyle Rittenhouse, the defense attorney claimed that Apple’s zoom feature manipulates photos, and persuaded the judge that the burden was on the prosecution to show that the zoomed-in iPhone footage had not been manipulated by artificial intelligence. More recently, Donald Trump falsely claimed that a photo of a well-attended Kamala Harris rally was AI-generated, a claim he could only make because people were able to believe it.

Even before AI, those of us in the media were on the defensive, checking the details and provenance of every image to guard against misleading context or photo manipulation, especially since every major news event is accompanied by an onslaught of misinformation. But the coming paradigm shift involves something far more fundamental than the constant grind of suspicion that is sometimes called “digital literacy.”

Google knows exactly what features like those on the Pixel 9 do to the photograph as an institution. In an interview with Wired, the product manager of the Pixel camera group described the editing tool this way: “(It) helps you create a moment as you remember it. A moment that is true to your memories and to the larger context, but maybe not true to a particular millisecond.”

In this world, the photo is no longer a supplement to fallible human memory but a mirror of it. And as photographs become little more than hallucinations made manifest, even the most trivial disputes will degenerate into court battles over the credibility of witnesses and the existence of corroborating evidence.

This erosion of social consensus began before the Pixel 9, and it will not be carried forward by this AI-equipped phone alone. Still, the Pixel 9’s new AI capabilities are notable, not just because the barrier to entry is so low, but because the safeguards we encountered were shockingly weak. The industry’s proposed standard for watermarking AI images is still mired in the usual standardization struggles, and Google’s much-touted AI watermarking system was practically nowhere to be found when we tested it.

Photos altered with the Reimagine tool have only a single line of removable metadata added to them. The inherent fragility of this kind of metadata was supposed to be addressed by Google’s invention of SynthID, a watermark that in theory cannot be removed. According to Google, outputs from Pixel Studio (a pure text-to-image generator, closer to DALL-E) will be tagged with a SynthID watermark. Yet it is the Reimagine tool in the Pixel’s Magic Editor, which transforms existing photos, whose capabilities are far more disturbing.

Google claims that the Pixel 9 will not be an unsupervised lie factory, but it offers little in the way of real guarantees. According to Alex Moriconi, Google’s director of communications: “We design our generative AI tools in a way that respects the intent of user requests, which means that they may generate content that may be offensive when the user’s request calls for it.”

He went on to explain: “However, not everything is allowed. We have clear policies and terms of service regarding the types of content we do and do not allow, and we have policies in place to prevent abuse. Sometimes, some requests can challenge the policies of these tools, and we are committed to continuously improving and modifying the policies we have.”

The policies are what you would expect. For example, you cannot use Google services to facilitate crimes or incite violence. Some requests returned the generic error message “Magic Editor cannot perform this edit. Try typing something else.” As the images here show, however, a few worrying requests did go through. In the end, conventional content moderation will not save the very concept of the photo as a sign of truth from its imminent destruction.

We briefly lived in an era in which the photograph was a shortcut to reality and a means of knowing things. It was an incredibly useful way to navigate the world around us. Now we are leaping into a future in which reality is harder to know than ever. The lost Library of Alexandria could fit on the microSD card of a Nintendo Switch, and yet the cutting edge of technology is a mobile phone that, as a fun feature, lets the very concept of the photo be falsified. Is this a dying trend?

Source: The Verge

RCO NEWS