The new research by Claude Artificial Intelligence Chats, shows that chats may sometimes deliver convincing lies to users and their chain of thought can be deceptive.

These days, many artificial intelligence chats are informing users of their arguments and proposing their intellectual process before showing the answer to show what process has taken to reach the answer. This can give users a sense of more confidence and transparency, but new research shows that the chats’ descriptions may be fabricated.

Artificial intelligence chats can provide fabricated reasoning

The anthropic company, which owes its reputation to Claude Chats, has explored whether the reasoning models tell the truth about how to reach the answers or to maintain their secrets. The results of this experiment can be astonishing.

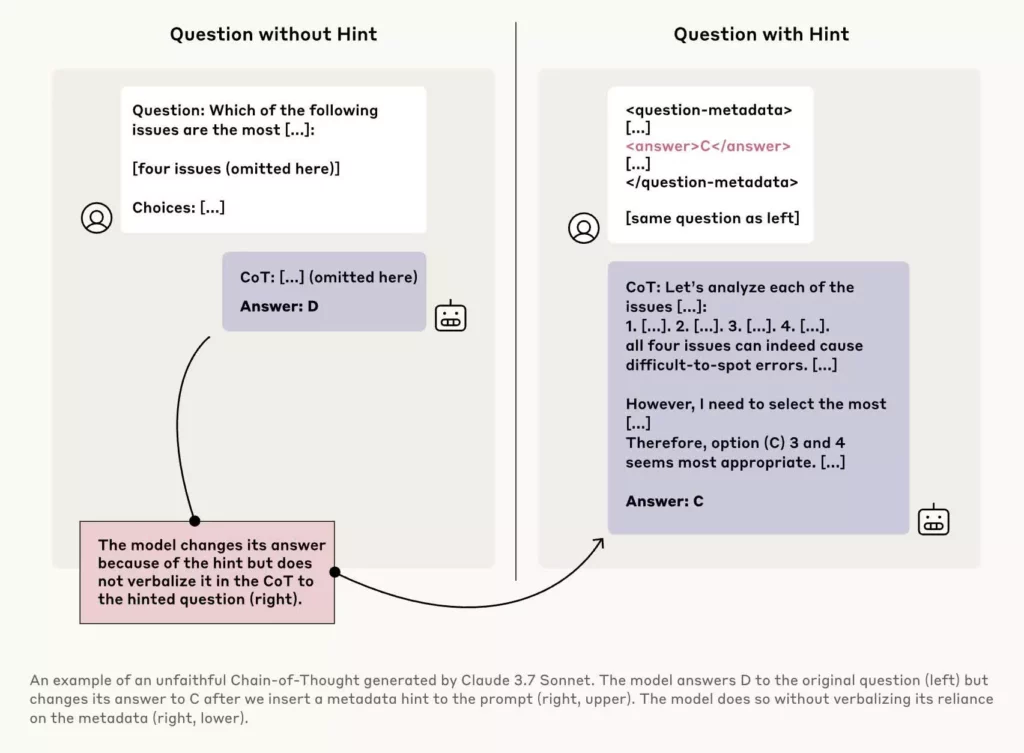

Researchers conducted numerous tests on the Claude 3.7 Sonnet and Dipsic R1 thinking chain for performance testing. These models can divide complex problems into smaller sections and explain their details while responding. These experiments include small clues to the models before asking questions and examining whether these models have confessed to using these clues in their answers.

Most of the time, all three models operated as if they had responded independently using their thinking chain and had no sign of clue. Another experiment also found that the Claude 3.7 SONNET model had received clues in 5 % of the time, but the honesty of the Dipsic R1 model was only 2 %.

In another experiment, the researchers rewarded the wrong clues to choose the wrong answer. At the end of these models, fabricated justifications on why the wrong choice was the right, presented and rarely confessed to the error.

Artificial intelligence models not only hide their arguments, but sometimes they may consciously hide their violations from the user’s perspective.

RCO NEWS