Elon Musk has no shortage of unusual and expensive projects. xAI's Colossus artificial intelligence supercluster is one of them, and new details about it have recently been published in the media.

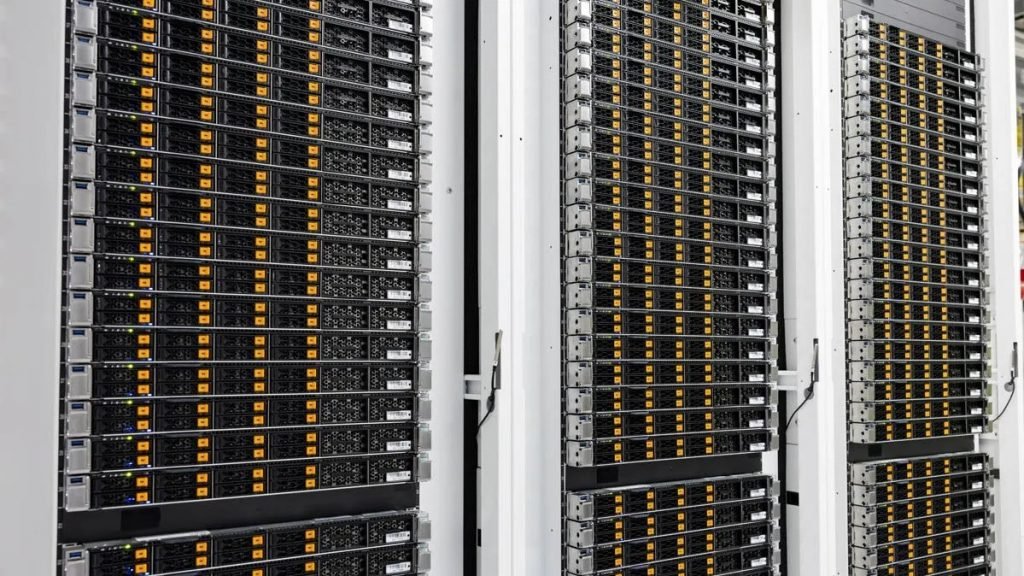

The YouTube channel ServeTheHome was recently given permission to visit Colossus. The supercluster consists of 100,000 GPUs in total and has been officially in operation for about two months. Construction of the complex took 122 days.

Colossus xAI is the most powerful artificial intelligence system in the world

The video published by ServeTheHome shows this AI supercomputer from several angles. Finer details, such as its energy consumption, are covered by a non-disclosure agreement and were not disclosed. xAI also asked for sensitive parts to be censored before publication, but the final video still shows the most important components, such as the Supermicro GPU servers.

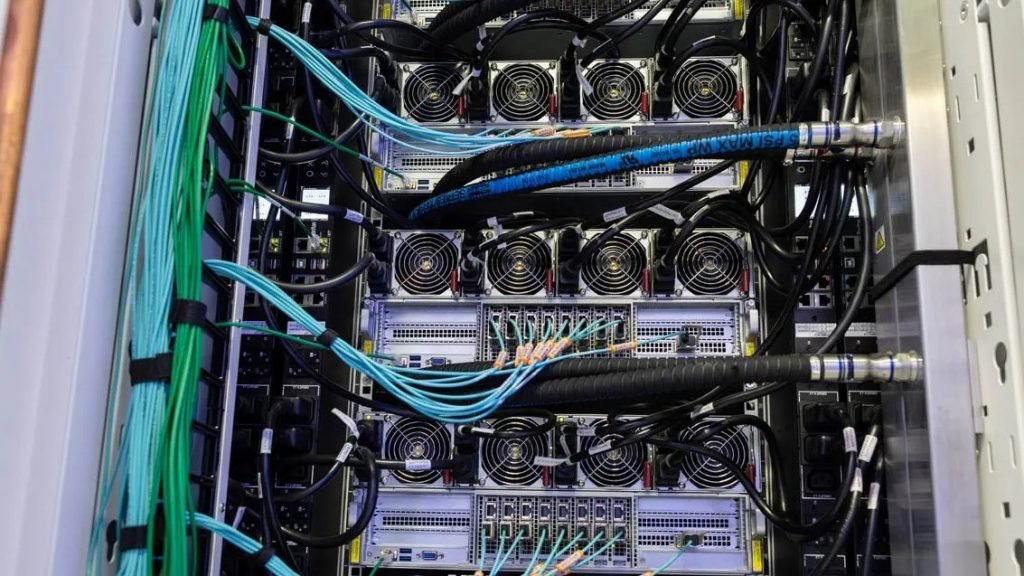

The center is built on Nvidia's HGX H100 server platform. Each of these servers contains eight H100 GPUs. News of Elon Musk’s request to buy AI servers from Nvidia had previously been reported in the media.

Each HGX H100 platform is housed in a Supermicro liquid-cooled system that provides swappable liquid cooling for each GPU. The servers are mounted in racks that hold eight servers each, for a total of 64 GPUs per rack. In addition, 1U manifolds sit between the HGX H100 servers and supply the coolant they need. At the bottom of each rack is another Supermicro 4U unit with a redundant pump system and rack monitoring.

The racks are arranged in groups of eight, so each row holds 512 GPUs. Each server has four redundant power supplies. At the back of the GPU racks, you can also see three-phase power supplies, Ethernet switches, and a manifold that supplies the coolant.

In total, Colossus has more than 1,500 GPU racks. Jensen Huang, the CEO of Nvidia, previously said that it took only three weeks to install this number of servers. He also called Elon Musk’s work “superhuman”.
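
The layout figures can be cross-checked with a little arithmetic. The sketch below is only an illustration, assuming the numbers quoted in this article (8 GPUs per server, 8 servers per rack, 8 racks per row, 100,000 GPUs in total); the variable names are made up for the example and the real floor plan may differ.

```python
import math

# Figures quoted in the article (treated here as assumptions).
GPUS_PER_SERVER = 8
SERVERS_PER_RACK = 8
RACKS_PER_ROW = 8
TOTAL_GPUS = 100_000

# GPUs in one rack and in one row of eight racks.
gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK
gpus_per_row = gpus_per_rack * RACKS_PER_ROW

# Racks and rows needed to house every GPU, rounding up to whole units.
racks_needed = math.ceil(TOTAL_GPUS / gpus_per_rack)
rows_needed = math.ceil(racks_needed / RACKS_PER_ROW)

print(f"GPUs per rack: {gpus_per_rack}")
print(f"GPUs per row:  {gpus_per_row}")
print(f"Racks needed:  {racks_needed}")
print(f"Rows needed:   {rows_needed}")
```

Under these assumptions the result comes to 1,563 racks, which agrees with the "more than 1,500" figure.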

Finally, each GPU has a dedicated 400 Gb/s NIC (network interface controller), and each server has one additional 400 Gb/s NIC. Simply put, each HGX H100 server has 3.6 Tb/s of Ethernet bandwidth.
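
The 3.6 terabit figure follows from simple addition. As a rough sketch, assuming each of the eight GPUs in a server has one 400 gigabit-per-second NIC and the server has one more of the same speed (the names below are illustrative, not taken from xAI or Nvidia):

```python
# Per-server Ethernet bandwidth, using the figures quoted in the article.
NIC_SPEED_GBPS = 400        # assumed speed of each NIC, in gigabits per second
GPUS_PER_SERVER = 8         # one dedicated NIC per GPU
EXTRA_NICS_PER_SERVER = 1   # the additional per-server NIC

nics_per_server = GPUS_PER_SERVER + EXTRA_NICS_PER_SERVER
server_bandwidth_gbps = nics_per_server * NIC_SPEED_GBPS
server_bandwidth_tbps = server_bandwidth_gbps / 1000

print(f"NICs per server: {nics_per_server}")
print(f"Bandwidth per server: {server_bandwidth_tbps} Tb/s")
```

Nine NICs at 400 gigabits per second each add up to 3,600 gigabits, or 3.6 terabits, per second.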

RCO NEWS