Maia 100, Microsoft's artificial intelligence chip, is one of the company's latest products and puts it in direct competition with companies such as Nvidia and Intel. Microsoft has now published more details about the chip.

According to Neowin, Microsoft first unveiled its plans to produce a dedicated artificial intelligence chip in 2023. Earlier this year, at the Microsoft Build conference, some details of the chip were made public. Now the full details of the Maia 100 have been released at the Hot Chips event.

Details of Microsoft’s Maia 100 artificial intelligence chip

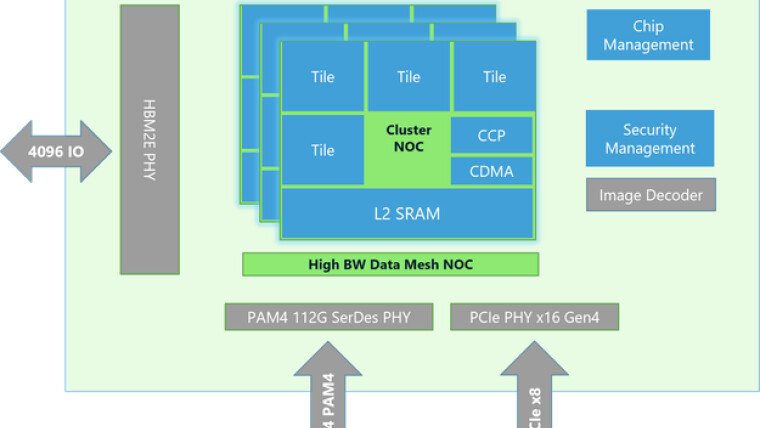

The Maia 100 is manufactured on TSMC's 5nm process and is used exclusively for large-scale workloads in Microsoft Azure; it can also run OpenAI models. The platform combines the chip with custom server boards, racks, and dedicated software to provide a cost-effective, high-end solution for artificial intelligence workloads.

The Maia 100 is also designed to manage its overall energy consumption while running artificial intelligence workloads at the highest possible level of performance. The accelerator has 64 GB of HBM2E memory, less than the 80 GB of memory on Nvidia's H100 chip.

According to Microsoft, the Maia 100 architecture includes a high-speed tensor unit (16xRx16) that provides fast processing for both training and inference while supporting a wide range of data types.

Microsoft has also announced that it will make the Maia SDK available to developers. The kit includes tools that let developers port models previously written in PyTorch and Triton to the platform. The SDK also includes framework integration, developer tools, two programming models, and a compiler.

RCO NEWS