Apple has published new research showing how large language models (LLMs) can analyze audio and motion data to gain better insight into what users are doing.

A new paper titled “Using LLMs for Late Multimodal Sensor Fusion for Activity Recognition” describes how Apple might combine LLM analysis with traditional sensor data to understand user activity more accurately. According to the researchers, the approach has strong potential to improve activity-recognition accuracy even when sensor data is limited.

Large language models can identify user activity from limited data

The research found that large language models are remarkably good at inferring user activities from audio and motion signals, even though they were not specifically trained for this task. When given just a single example, their accuracy improves further.

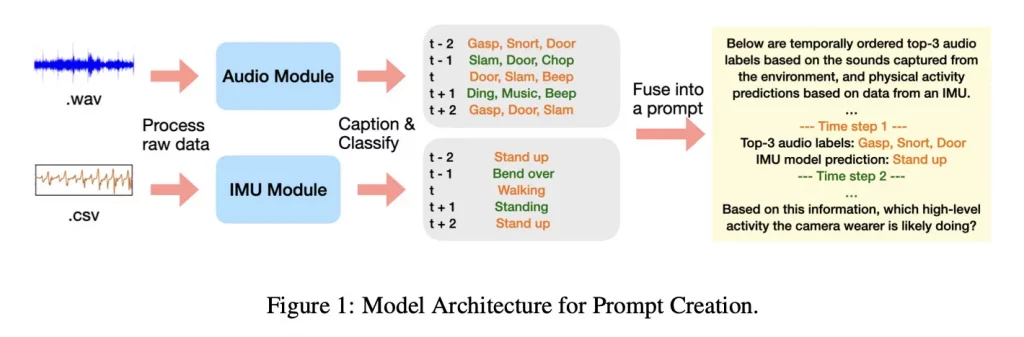

One important detail: the LLMs never received the actual audio recordings. Instead, they were given short text descriptions generated by audio models, along with the output of an IMU-based motion model. An IMU (inertial measurement unit) tracks motion using accelerometer and gyroscope data.

The researchers explain that they used Ego4D, a massive dataset of footage captured from a first-person perspective. It contains thousands of hours of recordings from real-world environments and situations, ranging from household chores to outdoor activities.

The researchers passed the audio and motion data through smaller models that generated text captions and class predictions, then fed these outputs to several LLMs, including Gemini 2.5 Pro and Qwen-32B, to see how well they could identify the activities.

Apple compared the models' performance in two settings: one in which they were given a list of 12 possible activities to choose from, and one in which no options were provided.
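To make the setup more concrete, here is a minimal Python sketch of how text outputs from smaller audio and motion models might be packed into a single LLM prompt. It covers both the closed-set case (a list of candidate activities) and the open-ended case, and either zero-shot or one-shot prompting. This is not Apple's code. The `SensorOutputs` structure, the `describe` and `build_prompt` helpers, the prompt wording and the activity labels are all hypothetical. The actual call to an LLM such as Gemini 2.5 Pro or Qwen-32B is left out.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class SensorOutputs:
    """Text produced by smaller per-modality models (hypothetical structure)."""
    audio_caption: str            # caption from an audio captioning model
    audio_labels: Sequence[str]   # top class predictions from an audio classifier
    imu_labels: Sequence[str]     # top class predictions from an IMU motion model


def describe(outputs: SensorOutputs) -> str:
    """Render one clip's model outputs as plain text; the LLM never sees raw audio."""
    return (
        f"Audio caption: {outputs.audio_caption}\n"
        f"Audio classes: {', '.join(outputs.audio_labels)}\n"
        f"Motion (IMU) classes: {', '.join(outputs.imu_labels)}"
    )


def build_prompt(
    outputs: SensorOutputs,
    candidates: Optional[Sequence[str]] = None,
    example: Optional[Tuple[SensorOutputs, str]] = None,
) -> str:
    """Build a zero-shot or one-shot prompt, closed-set or open-ended (hypothetical wording)."""
    parts = ["Infer the activity the user is performing from these sensor summaries."]
    if candidates:
        # Closed-set: the model must choose one label from the supplied list.
        parts.append("Answer with exactly one of: " + ", ".join(candidates) + ".")
    else:
        # Open-ended: the model names the activity in its own words.
        parts.append("Answer with a short activity description.")
    if example is not None:
        # One-shot: include a single labeled example before the query clip.
        ex_outputs, ex_label = example
        parts.append("Example:\n" + describe(ex_outputs) + f"\nActivity: {ex_label}")
    # The query clip comes last, leaving the answer slot for the LLM to fill.
    parts.append(describe(outputs) + "\nActivity:")
    return "\n\n".join(parts)


# Usage with made-up model outputs and hypothetical candidate labels.
clip = SensorOutputs(
    audio_caption="Water running and dishes clinking",
    audio_labels=["water", "dishes"],
    imu_labels=["standing", "arm movement"],
)
print(build_prompt(clip, candidates=["cooking", "cleaning", "exercising"]))
```

Because every modality is reduced to text before it reaches the LLM, new sensors can be added in this sketch simply by extending `describe`, without retraining a joint model. The study itself used a list of 12 activities in the closed-set setting, not the three placeholders shown here.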

The researchers conclude that the results offer useful insight into combining multiple models to analyze activity and health data, especially when raw sensor data alone is not enough to give a clear picture of what the user is doing.

RCO NEWS