Technology companies are hungry for training data to build ever-larger artificial intelligence models, and this has raised concerns about user privacy. Google has now introduced VaultGemma, a model built with advanced privacy-preserving techniques.

VaultGemma is an experimental model showing that a powerful artificial intelligence model can be much less likely to memorize sensitive data from its training set. This advance could change how Google and other companies approach privacy in future AI products.

One of the biggest risks of large language models is that, instead of producing new content, they sometimes reproduce parts of their training data word for word. If that data includes personal information or copyrighted content, this can lead to privacy violations or serious legal problems.

Google's solution to this problem is a technique called Differential Privacy. It prevents the model from memorizing details of its training data by injecting calibrated noise during the training process. However, the technique has always had a major drawback: adding noise reduces the model's accuracy and increases the amount of computing power required.
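
To make "injecting noise during training" concrete, here is a minimal sketch of DP-SGD, the standard recipe for differentially private training. It is an illustration under our own assumptions, not Google's training code: each example's gradient is clipped to a maximum norm so no single example can dominate, the clipped gradients are summed, Gaussian noise scaled by a noise multiplier is added, and only then is the update applied. The function names and parameters (`clip_norm`, `noise_multiplier`) are hypothetical, and real systems also track the privacy budget spent, which this sketch omits.

```python
import math
import random


def clip(grad, clip_norm):
    """Scale one example's gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One illustrative DP-SGD update (no privacy accounting)."""
    batch_size = len(per_example_grads)
    # 1. Bound each example's influence by clipping its gradient.
    clipped = [clip(g, clip_norm) for g in per_example_grads]
    # 2. Sum the clipped gradients coordinate by coordinate.
    summed = [sum(col) for col in zip(*clipped)]
    # 3. Add Gaussian noise calibrated to the clipping bound.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average over the batch and take an ordinary gradient step.
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.3, 0.4], [-1.0, 0.0]]  # toy per-example gradients
    print(dp_sgd_step([0.0, 0.0], grads, lr=0.1, clip_norm=1.0,
                      noise_multiplier=1.1, rng=rng))
```

Setting `noise_multiplier=0.0` turns this back into plain SGD with gradient clipping, which makes it easy to see that the noise term is what trades accuracy for privacy.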

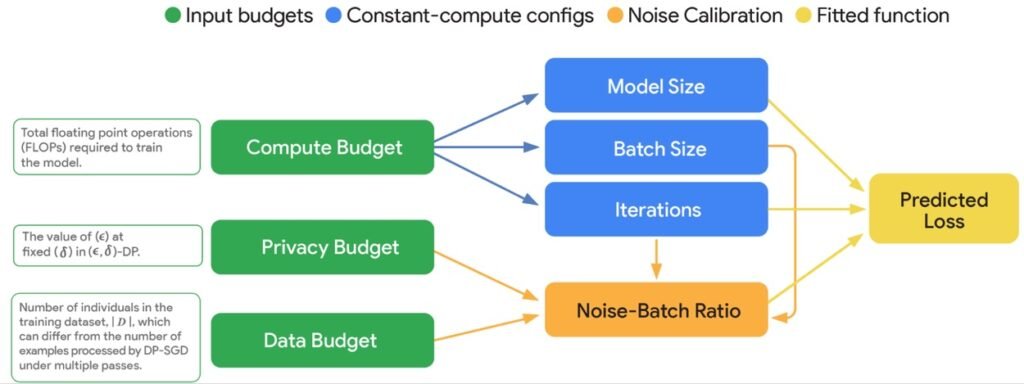

The Google Research team's main achievement was establishing, for the first time, "scaling laws" for these private models. They derived a formula for the optimal balance among three key factors: the privacy budget (amount of noise), the compute budget (processing power), and the data budget (volume of training data). This lets developers use their resources more efficiently when building private models.
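
The article does not reproduce Google's formula, so the sketch below is only a hedged illustration of why these budgets interact, using standard DP-SGD arithmetic rather than the published scaling law. If the summed gradient receives Gaussian noise with standard deviation `noise_multiplier * clip_norm` and is then divided by the batch size, the noise left in the averaged gradient shrinks as batches grow, so spending more compute on larger batches can offset some of the accuracy cost of privacy. The simplification here is our assumption: the noise multiplier is held fixed, although in practice the multiplier needed for a given privacy budget also depends on batch size and the number of training steps.

```python
def averaged_noise_std(noise_multiplier, clip_norm, batch_size):
    """Std. dev. of the noise remaining in an averaged DP-SGD gradient.

    Assumes noise of std noise_multiplier * clip_norm is added to the
    summed batch gradient, which is then divided by batch_size.
    """
    return noise_multiplier * clip_norm / batch_size


if __name__ == "__main__":
    # Same noise multiplier, increasingly large (and more compute-hungry) batches.
    for batch_size in (256, 1024, 4096):
        std = averaged_noise_std(1.0, 1.0, batch_size)
        print(f"batch={batch_size:5d}  noise std per averaged gradient={std:.6f}")
```

Each fourfold increase in batch size cuts the per-step noise by four, which is one intuition for why private training tends to favor very large batches and therefore more compute.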

Google says this technique is unlikely to be used for giant models where maximum performance is the top priority. Instead, it is much better suited to smaller, targeted models that power specific product features (such as summarizing emails or generating better responses).

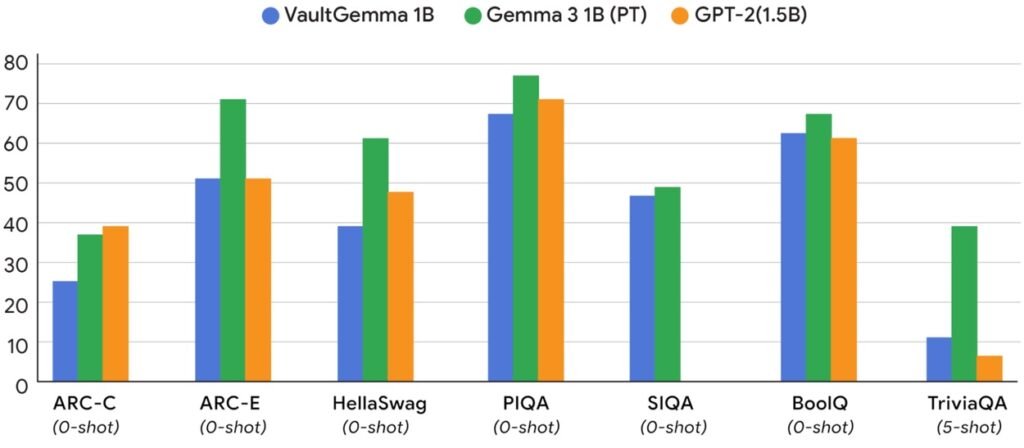

VaultGemma is an open-weight model based on Gemma 2. With about 1 billion parameters, it is relatively small compared with today's giant models. It is currently available for download on the Hugging Face and Kaggle platforms.

RCO NEWS