In a major policy change, the creator of the popular Claude AI model has announced that it will use users’ conversations and code to train and improve its future models unless users explicitly opt out.

Anthropic has just updated its policies so that it can use users’ conversations to train its models. It is also extending the retention period for data from users who agree to this to five years. The new policy has raised serious concerns about privacy and about how user consent is obtained.

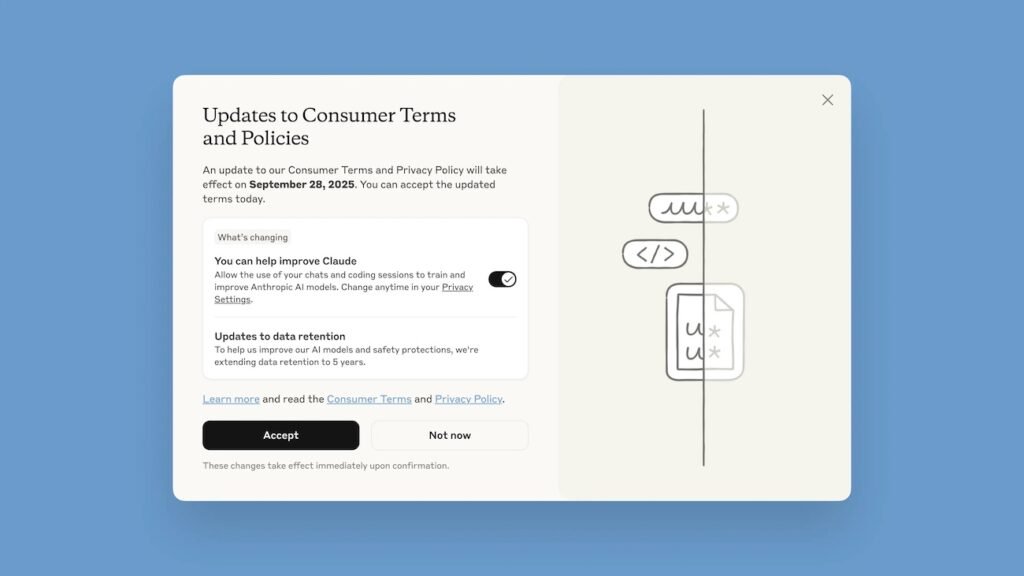

Users opening Claude are now shown a pop-up window titled “Consumer Terms and Policies” and must make their decision by September 1. However, the design of this window has drawn criticism.

Training Anthropic’s AI models on user conversations

A large, prominent “Accept” button sits at the bottom of the new pop-up, while the key option, permission to use data for training, appears below it in smaller print as a toggle switch. This toggle is set to “On” by default. As a result, many users may agree to share their data simply by clicking the big “Accept” button.

If you agreed by mistake, you can turn this option off by going to Settings, then Privacy, and then the “Help improve Claude” setting. Note, however, that this change affects only future data; data that has already been used for training cannot be withdrawn.

The update applies to all Claude consumer subscribers, including the Free, Pro, and Max plans. Anthropic has emphasized, however, that the changes do not apply to business and institutional users (such as Claude for Work and Claude for Education) or to users who access Claude through other companies’ platforms, such as Amazon Bedrock and Google Cloud.

Importantly, the policy applies only to new conversations and to old conversations that are resumed. Your previous conversations will not be used for training unless you continue them.

Anthropic has stated that the goal is to make its AI models more capable and useful. According to the company, user data will help improve model safety, detect harmful content more accurately, and strengthen skills such as coding and analysis.

The company says it uses a combination of automated tools and processes to filter or obfuscate sensitive data and that it does not sell user data to any third party. Users who decline to share their data will remain under the previous policy, and their data will be deleted after 30 days.

RCO NEWS