Apple's artificial intelligence research team is developing an AI agent that can describe street views for blind people. The tool is meant to help blind users get a description of an area.

Apple's machine learning research group recently published an article describing a project called SceneScout. SceneScout is an AI agent built on a large language model that can look at street view images, analyze what they show, and describe it to the user.

Apple’s artificial intelligence agent describes different scenes for the blind

According to the article, visually impaired people may avoid traveling or getting around because they are unfamiliar with a new environment. Tools that describe a person's surroundings already exist, such as Microsoft's Soundscape app, introduced several years ago. However, these tools are all designed for in-situ use, at the moment and in the place where the person is, and cannot be used to learn about a place in advance.

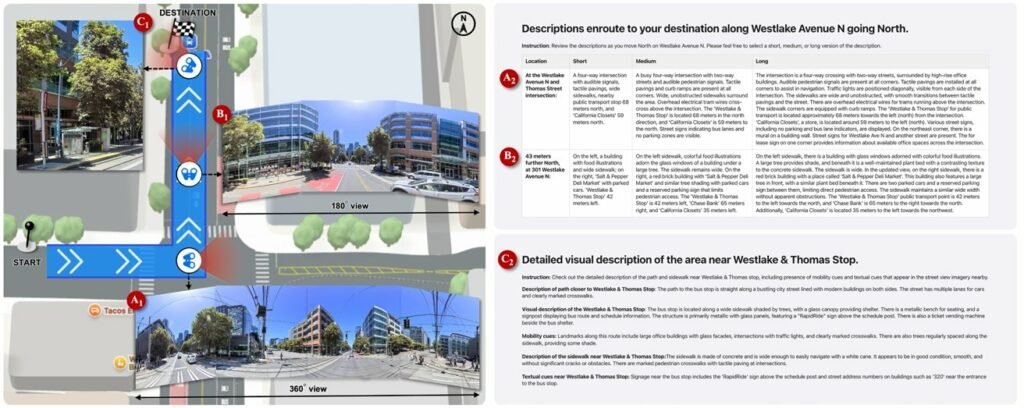

This is where SceneScout comes in. As an AI agent, SceneScout provides accessible interactions with street view imagery, allowing blind and low-vision people to get a description of an area before traveling there.

SceneScout has two modes. The first, called "Route Preview," describes the elements along a route. For example, it can tell the user that there are trees at a turn and point out other elements they can touch or otherwise detect.

The second mode, "Virtual Exploration," lets the user move freely through street view imagery while the agent describes the surroundings as they move virtually.

A study found that SceneScout is very useful for visually impaired people because it provides information they cannot access with existing methods.

The study also measured SceneScout's accuracy, both for its descriptions overall and for describing stable visual elements. Some minor errors were nonetheless found in its output.

RCO NEWS