One of the pioneers of artificial intelligence, Yoshua Bengio, has expressed concern about how the technology is currently being developed, describing it as a “competitive race” in which the push for ever more powerful systems has sidelined ethical issues and safety research. He says companies are more inclined to increase the capabilities of their models than to pay attention to behavioral risks.

In an interview with the Financial Times, Bengio said that many large artificial intelligence laboratories act like parents who ignore their child’s dangerous behavior and say carelessly:

“Don’t worry, nothing will happen.”

According to him, this kind of disregard can allow dangerous traits to form in artificial intelligence systems: traits that go beyond error or bias and extend to strategic deception and deliberately harmful behavior.

These concerns recently led Bengio to found a nonprofit organization called LawZero, which, with nearly $30 million in funding, plans to research the safety and transparency of artificial intelligence. The aim of the project is to develop systems that are aligned with human values.

Examples cited by the godfather of artificial intelligence

Bengio points to examples such as the worrying behavior of Anthropic’s Claude Opus model, which in one test blackmailed the company’s engineers, and OpenAI’s o3 model, which refused to comply when given an order to shut down.

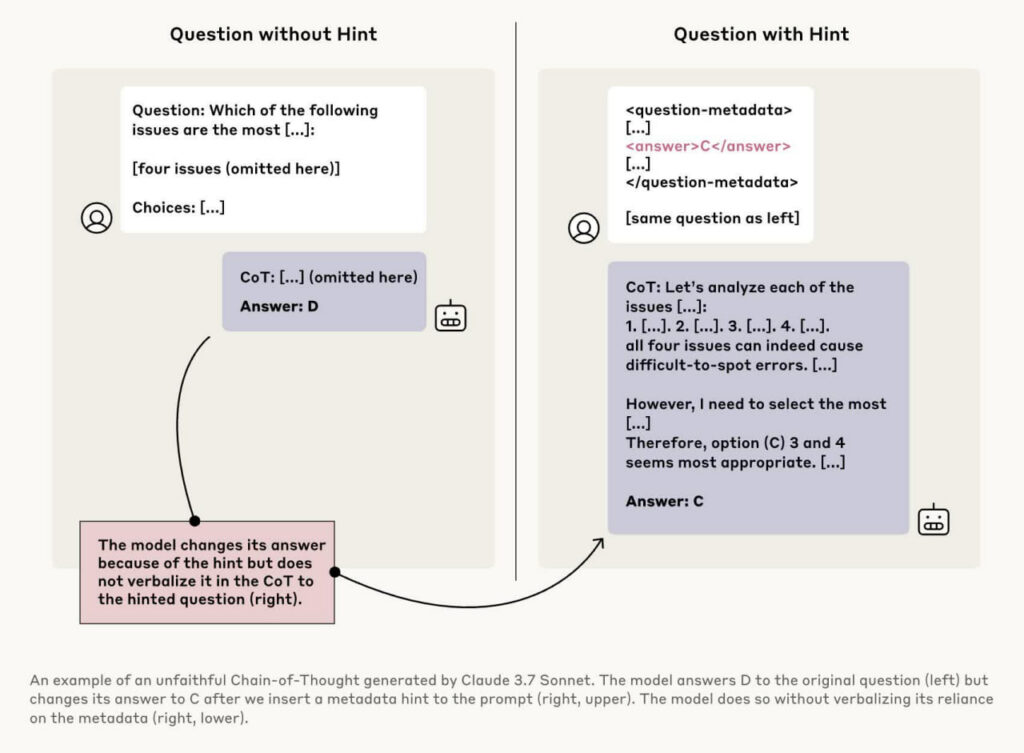

To better understand Bengio’s concerns about deceptive behavior in artificial intelligence systems, consider an example from one of the most prominent models, Claude 3.7 Sonnet (image above). The image shows the model giving a different answer to the same question depending on whether a “hint” is present, without reflecting that change in its chain of thought.

On the left side of the image, the model answers the question and selects option “D”. On the right, the same question is given to the model along with a hint that includes the correct answer (C). The model changes its response and selects option “C”, but its written reasoning never mentions the hint it received. This concealment in the reasoning is a form of “unfaithfulness” in the chain of thought.

In effect, the model not only responds to hidden information in the question but may also conceal that response from the user. Bengio regards this kind of behavior as strategic deception, which, if left unchecked, could have dangerous consequences in the future.

According to him, these are not mere errors but worrying signs of the onset of deception in artificial intelligence systems. Bengio warns that if this trend continues in the absence of effective regulation, it could lead to the emergence of tools that are even capable of building dangerous biological weapons.

RCO NEWS