OpenAI has introduced a new benchmark called PaperBench to measure the ability of AI agents to replicate state-of-the-art AI research. The test examines whether an AI can understand research papers, write the relevant code, and execute it to reproduce the results reported in the paper.

What is PaperBench?

The benchmark uses 20 top papers from the International Conference on Machine Learning (ICML) 2024, spanning 12 different topics. Together, these papers comprise 8,316 individually gradable tasks. To make grading more precise, a rubric system was developed that breaks each task down hierarchically into smaller sub-tasks, each with specific grading criteria. The rubrics were developed in collaboration with the authors of each ICML paper to keep them accurate and realistic.
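A hierarchical rubric of this kind can be pictured as a tree whose leaves are graded pass/fail and whose inner nodes aggregate their children. The following is only a minimal sketch under stated assumptions: the class name `RubricNode`, the `weight` field, the weighted-average aggregation, and the example requirements are all hypothetical illustrations, not PaperBench's actual code or data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RubricNode:
    """Hypothetical rubric node: leaves carry a pass/fail grade, inner nodes carry children."""
    name: str
    weight: float = 1.0
    passed: Optional[bool] = None  # only meaningful on leaf nodes
    children: List["RubricNode"] = field(default_factory=list)


def score(node: RubricNode) -> float:
    """Return a score in [0, 1]: a leaf is 0 or 1, a parent is the
    weighted average of its children (an assumed aggregation rule)."""
    if not node.children:
        return 1.0 if node.passed else 0.0
    total_weight = sum(child.weight for child in node.children)
    return sum(child.weight * score(child) for child in node.children) / total_weight


# Illustrative rubric for one made-up paper; names and grades are invented.
root = RubricNode("reproduce paper", children=[
    RubricNode("code written", weight=2.0, children=[
        RubricNode("training loop implemented", passed=True),
        RubricNode("baseline implemented", passed=False),
    ]),
    RubricNode("code executes", weight=1.0, passed=False),
    RubricNode("reported result matched", weight=1.0, passed=True),
])

replication_score = score(root)
print(f"replication score: {replication_score:.1%}")
```

Grading only the leaves keeps each judgment small and checkable, while the tree turns those judgments into a single percentage per paper.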

In this test, the AI must extract the necessary details from the paper and submit all the code needed to reproduce it in a repository. The AI must also create a script called reproduce.sh that runs the code and reproduces the paper's results.

Evaluation by an AI judge

The entire process is graded by an AI judge. OpenAI claims that this judge is about as accurate as a human grader. The research paper states: “Our best LLM-based judge, which uses o3-mini-high with custom scaffolding, achieves an F1 score of 0.83, suggesting that this judge is a reasonable stand-in for a human judge.”
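For readers unfamiliar with the metric, an F1 score summarizes how well the judge's pass/fail decisions agree with a human grader's. The sketch below computes a plain binary F1 for the "pass" class; the function name and the labels are made up for illustration, and the exact averaging scheme used in the paper is not described in this article, so this should not be read as the authors' method.

```python
from typing import Sequence


def f1_score(human: Sequence[bool], judge: Sequence[bool]) -> float:
    """Binary F1 of the judge's 'pass' decisions, treating human grades as ground truth."""
    tp = sum(1 for h, j in zip(human, judge) if h and j)        # both say pass
    fp = sum(1 for h, j in zip(human, judge) if not h and j)    # judge passes, human fails
    fn = sum(1 for h, j in zip(human, judge) if h and not j)    # judge fails, human passes
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Invented grades for five rubric items.
human_grades = [True, True, False, True, False]
judge_grades = [True, False, False, True, True]

f1 = f1_score(human_grades, judge_grades)
print(f"F1 = {f1:.2f}")
```

A score of 1.0 would mean the judge matches the human on every item it passes or should have passed; lower values reflect false passes and missed passes.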

Initial results

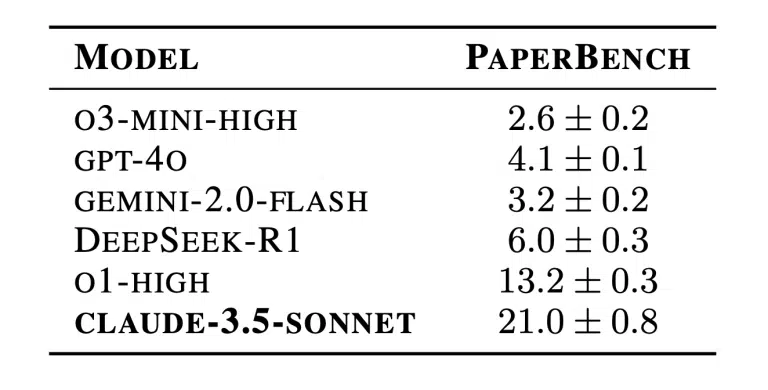

Several AI models were tested on PaperBench. The best performer was Anthropic's Claude 3.5 Sonnet, which achieved a replication score of 21.0%. Other models, including OpenAI's o1 and GPT-4o, Gemini 2.0 Flash, and DeepSeek-R1, scored lower.

By comparison, machine learning PhD students earned a score of 41.4%, indicating a significant gap between the current capabilities of AI and human expertise.

Longer-duration test

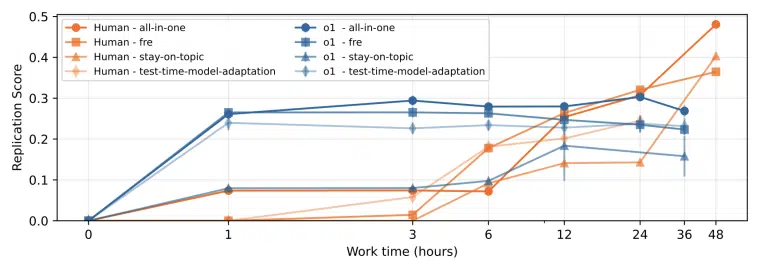

A separate test was also run with the o1 model over a longer period of time, but it still failed to reach the level of the human effort.

Public availability

The PaperBench code is now publicly available on GitHub. A lighter version of the benchmark, called PaperBench Code-Dev, has also been released so that more people can use it.

RCO NEWS