A new study shows that 3.5% of the texts produced by the DeepSeek artificial intelligence model bear a significant stylistic similarity to ChatGPT outputs. These findings may indicate that DeepSeek used OpenAI outputs in its training process.

According to Forbes, the study was conducted by Copyleaks, a company active in AI-based content detection. According to the company, the results could have important consequences for intellectual property rights, legislation and the future development of artificial intelligence.

Similarity of DeepSeek's writing style to OpenAI

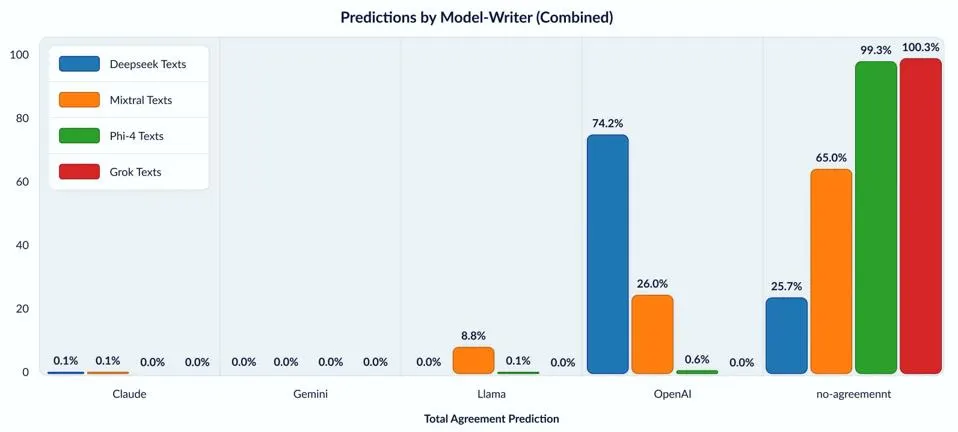

In this study, Copyleaks used screening technology and classification algorithms to identify text from different language models, including OpenAI, Claude, Jina, Llama and DeepSeek. The classification relies on consensus voting to reduce the likelihood of errors and increase accuracy.
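The general idea of consensus voting can be illustrated with a short sketch. This is a hypothetical example of majority or unanimous voting across several classifiers, not Copyleaks' actual system; the classifier functions, the `consensus_label` name and the `min_agreement` threshold are assumptions made for illustration.

```python
from collections import Counter
from typing import Callable, Optional, Sequence

# Hypothetical: a "classifier" is any function mapping a text to a model
# label such as "OpenAI", "Claude" or "DeepSeek".
Classifier = Callable[[str], str]


def consensus_label(
    text: str,
    classifiers: Sequence[Classifier],
    min_agreement: float = 1.0,
) -> Optional[str]:
    """Return the label enough classifiers agree on, or None otherwise.

    min_agreement=1.0 demands a unanimous vote; any value above 0.5
    demands a strict majority, so two labels can never tie.
    """
    if not classifiers:
        raise ValueError("need at least one classifier")
    if not 0.5 < min_agreement <= 1.0:
        raise ValueError("min_agreement must be in (0.5, 1.0]")
    votes = Counter(clf(text) for clf in classifiers)
    label, count = votes.most_common(1)[0]
    if count / len(classifiers) >= min_agreement:
        return label
    return None  # no consensus: leave the text unattributed


# Usage with three toy classifiers that disagree.
clfs = [lambda t: "OpenAI", lambda t: "OpenAI", lambda t: "DeepSeek"]
print(consensus_label("sample text", clfs))       # None (not unanimous)
print(consensus_label("sample text", clfs, 0.6))  # OpenAI (2 of 3 votes)
```

Raising the agreement threshold trades coverage for precision: fewer texts get attributed to any model, but those that are attributed are less likely to be misattributed.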

The key finding was that while the texts produced by most models had a distinctive style, a significant portion of DeepSeek's outputs resembled OpenAI outputs.

In an email interview, Shay Nissan, head of data science at Copyleaks, compared the study to the work of a handwriting expert who tries to identify the author of a handwritten text by comparing it with other people's handwriting. He described the results as surprising and very important.

Possible violation of OpenAI's intellectual property rights

Nissan emphasizes that this is not definitive proof that DeepSeek directly used OpenAI outputs, but it raises serious questions about the model's training process and data sources.

If it is determined that DeepSeek used OpenAI-generated texts to train its model without authorization, this could have significant legal consequences, including infringement of intellectual property and violation of OpenAI's terms of service. The lack of transparency about training data in the AI industry deepens this challenge and highlights the need for clear oversight frameworks that require disclosure of training sources.

Ethical and legal challenges

Although OpenAI itself has been criticized for using web content without explicit permission, the similarity of DeepSeek's style to ChatGPT adds new dimensions to the debate. In the absence of clear legal procedures, such cases are difficult to pursue, but tools such as fingerprint identification can provide a strong signal for tracking and investigating possible violations.

While some experts argue that models are likely to gradually converge in style because they are trained on similar data, Copyleaks says its consensus approach is designed to detect subtle stylistic differences, and that this similarity cannot be attributed to data overlap.

Finally, Nissan emphasized that even if training data is shared, each model's architecture, fine-tuning methods and text-generation techniques are unique, which gives each model a distinct fingerprint.

It remains unclear whether DeepSeek actually used OpenAI outputs without authorization, but these questions will certainly be a serious part of discussions on AI development and regulation in the near future. DeepSeek has not yet responded to requests for comment.

RCO NEWS