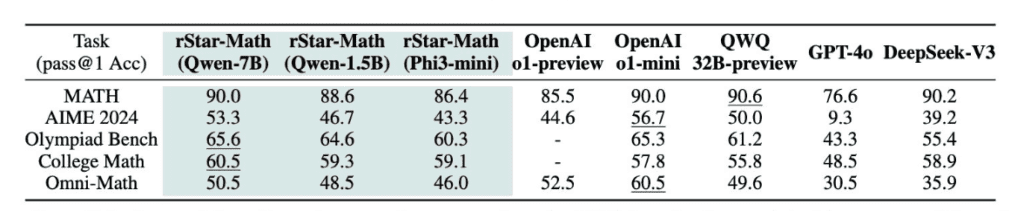

Microsoft has developed a new method, called rStar-Math, that enables small language models (SLMs) to solve complex mathematical problems with high accuracy, matching and in some cases surpassing larger models such as OpenAI's o1. Rather than relying on knowledge transferred from larger models, rStar-Math lets small models improve on their own through self-evolution.

“Our work shows that small language models can achieve advanced performance in mathematical reasoning through self-evolution and step-by-step scrutiny,” the researchers wrote in their paper.

Why is this important?

Features of rStar-Math

RCO NEWS