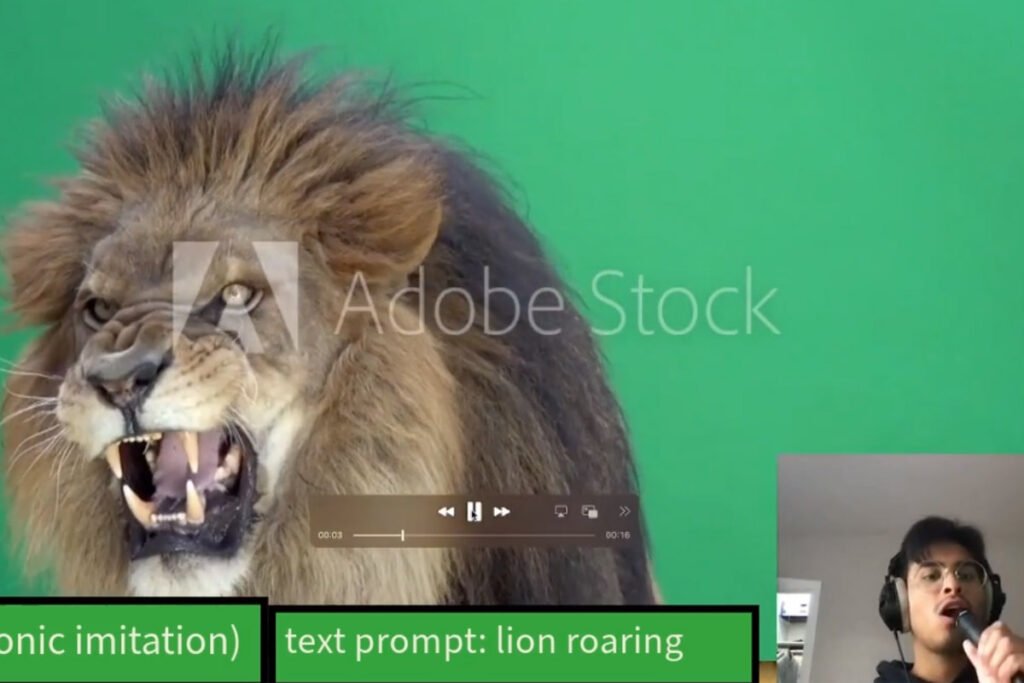

Researchers at Adobe Research and Northwestern University have developed an artificial intelligence system called Sketch2Sound. It lets sound designers create professional sound effects and atmospheres from nothing more than humming, vocal imitations, and text descriptions.

Processing simple audio input to produce accurate sounds

According to The Decoder, Sketch2Sound analyzes three key characteristics of the voice input: loudness, timbre (how bright or dark the sound is), and pitch. These features are then combined with the text description to produce a sound that closely matches what the user intends. For example, if the user enters the text prompt “forest atmosphere” and makes short sounds with their mouth, the system automatically turns those sounds into birdsong.
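
To make the three characteristics concrete, here is a minimal Python sketch that measures a loudness value, a brightness value, and a pitch value for one short frame of audio. It is not Sketch2Sound’s code: the function names, the synthetic sine-wave “hum”, and the specific measurements (RMS level, spectral centroid, autocorrelation peak) are assumptions chosen as common stand-ins for these properties.

```python
import cmath
import math


def sine_frame(freq_hz, amplitude, n_samples=400, sample_rate=8000):
    """Synthetic stand-in for one short frame of a recorded vocal imitation."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def loudness_db(frame):
    """Loudness proxy: root-mean-square level of the frame in decibels."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(max(rms, 1e-10))  # floor avoids log10(0) on silence


def brightness_hz(frame, sample_rate=8000):
    """Brightness proxy: spectral centroid (magnitude-weighted mean frequency)."""
    n = len(frame)
    weighted = total = 0.0
    for k in range(n // 2 + 1):
        # Naive DFT bin; slow, but dependency-free and fine for a short frame.
        bin_value = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(frame))
        magnitude = abs(bin_value)
        weighted += magnitude * k * sample_rate / n
        total += magnitude
    return weighted / total if total > 0 else 0.0


def pitch_hz(frame, sample_rate=8000, fmin=80.0, fmax=1000.0):
    """Pitch proxy: the lag with the strongest autocorrelation, as a frequency."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)

    def autocorrelation(lag):
        return sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))

    best_lag = max(range(min_lag, max_lag + 1), key=autocorrelation)
    return sample_rate / best_lag


# Describe a low, fairly loud hum with the three control values.
hum = sine_frame(200, 0.5)
print(loudness_db(hum), brightness_hz(hum), pitch_hz(hum))
```

Computed frame by frame over a whole recording, values like these form three curves over time, which is the kind of signal a generative model could be conditioned on alongside a text prompt.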

One notable feature of Sketch2Sound is that it recognizes the context of the user’s input. For example, when the user vocalizes a drum pattern, the system maps low notes to a bass drum sound and high notes to a snare drum sound.

Sound designers can also decide how tightly the generated sound follows their input. Adobe’s research team added a filtering technique to Sketch2Sound that lets designers control the output precisely or only roughly, depending on their needs. This could be especially useful for Foley artists, who create sound effects for films, because they can use their voice to produce the desired effects faster and more easily.
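
One plausible way to picture this precise-versus-rough control is to smooth a control curve taken from the voice input before it guides generation. The sketch below uses a median filter with an adjustable window; the choice of a median filter, the function name `median_smooth`, and the example values are illustrative assumptions, not details given in this article.

```python
from statistics import median


def median_smooth(curve, window):
    """Median-filter a control curve (hypothetical helper).

    window=1 returns the curve unchanged (precise control); larger odd
    windows discard short-lived detail (rough control). Windows are
    truncated at the edges of the curve.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    return [median(curve[max(0, i - half): i + half + 1])
            for i in range(len(curve))]


# A loudness curve with one brief spike followed by a sustained rise.
loudness_curve = [0, 0, 10, 0, 0, 5, 5, 5, 5]
precise = median_smooth(loudness_curve, 1)  # keeps the spike
rough = median_smooth(loudness_curve, 3)    # drops the spike, keeps the rise
```

With a small window the output would track every gesture in the voice; with a larger one only the overall shape survives, leaving the rest to the text prompt.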

Although Sketch2Sound opens up a wide range of possibilities for sound designers, the researchers note that spatial sound characteristics can sometimes cause problems during sound generation, and the team is working to solve them. Adobe has not yet announced a date for a commercial release of the tool.

RCO NEWS