A few days ago, OpenAI unveiled its advanced o3 model. Only a few people have tested it so far, but benchmarks show impressive performance. The model shows that AI models can still get better through scaling, but it also raises the issue of the very high cost of producing each answer.

According to a TechCrunch report, OpenAI used a method called “test-time scaling” to boost o3's performance, and the benchmarks suggest the method works. For example, o3 scored 25% on a difficult math test on which no other AI model had scored more than 2%.

Most surprising of all, according to Noam Brown, one of the creators of OpenAI's o-series models, the company introduced o3 just three months after unveiling o1, a remarkably short interval for such a big leap.

The high cost of generating each o3 response

Some AI experts, such as Ilya Sutskever, a co-founder of OpenAI, believe that the current way of training AI models has hit a dead end and can no longer produce much stronger models. Yet o3 has delivered much better performance than its predecessor in a short period of time.

Jack Clark, a co-founder of Anthropic, wrote in a blog post yesterday that o3 is evidence that AI progress in 2025 will be faster than in 2024. In the coming year, Clark says, the AI field will combine test-time scaling with traditional pre-training scaling methods to get even more out of AI models.

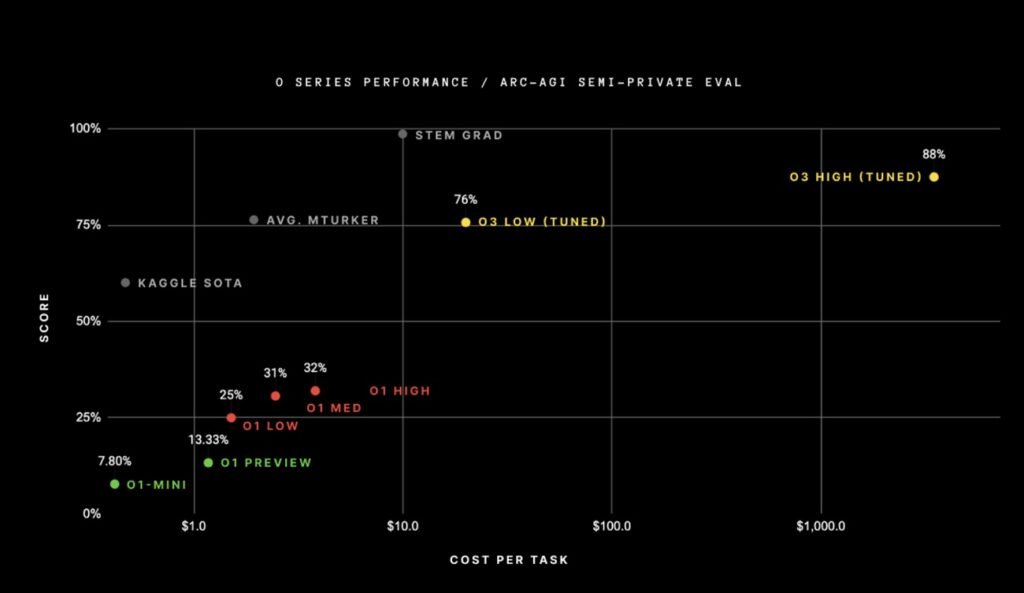

Test-time scaling means that OpenAI uses more processing power during ChatGPT's inference stage, that is, while the model is generating an answer to a prompt. It is not exactly clear what happens behind the scenes: OpenAI is either using more computer chips to answer the user's question, using more powerful chips, or running those chips for longer (10 to 15 minutes in some cases). Whatever OpenAI is doing, it is very expensive, as the chart above shows.
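OpenAI has not disclosed how o3 spends its extra inference compute, so the following is not a description of its method. It is a deliberately simplified toy model of one commonly discussed form of test-time scaling, majority voting over repeated attempts, included only to show why better answers and higher per-response cost tend to rise together. The per-attempt accuracy `p`, the cost per attempt, and the function names are hypothetical, and each attempt is assumed to be independently right or wrong.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent attempts is correct.

    Toy assumption (hypothetical): each attempt is independently correct with
    probability p, and the final answer is whatever more than half of the
    attempts agree on. n must be odd so there are no ties.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of attempts to avoid ties")
    need = n // 2 + 1  # smallest strict majority
    # Sum the binomial probabilities of getting at least `need` correct attempts.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


def inference_cost(n: int, cost_per_attempt: float) -> float:
    """Toy assumption (hypothetical): compute cost grows linearly with attempts."""
    return n * cost_per_attempt


if __name__ == "__main__":
    p = 0.6                  # hypothetical per-attempt accuracy
    cost_per_attempt = 0.05  # hypothetical dollars of compute per attempt
    for n in (1, 5, 25, 101):
        acc = majority_vote_accuracy(p, n)
        cost = inference_cost(n, cost_per_attempt)
        print(f"attempts={n:>3}  accuracy={acc:.3f}  cost=${cost:.2f}")
```

Under these assumptions, accuracy climbs toward 100% as the number of attempts grows, while cost rises in direct proportion to it: the extra quality is bought with more compute per answer. The model also only helps when `p` is above 0.5; below that, voting makes answers worse.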

Clark points to o3’s performance on the ARC-AGI benchmark, a tough test used to evaluate progress toward artificial general intelligence (AGI). According to the test's creators, passing it does not mean that an AI model has achieved AGI, but it is one way to measure progress toward this vague goal.

On this benchmark, o3 scored 88%, higher than any previous model; o1, for example, scored about 32%. That may be good news, but the chart's logarithmic x-axis is alarming, because each step along it represents a tenfold jump in cost rather than a fixed amount. This high-scoring version of o3 needs more than 1,000 dollars of compute to generate each response, whereas o1 costs about 5 dollars and o1-mini only a few cents.
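As a rough worked example using only the approximate per-response costs quoted above (more than 1,000 dollars for o3, about 5 dollars for o1), the horizontal distance between two points on a base-10 logarithmic axis equals the base-10 logarithm of their cost ratio:

$$
\frac{c_{\text{o3}}}{c_{\text{o1}}} > \frac{1000}{5} = 200,
\qquad
\log_{10} 200 \approx 2.3
$$

In other words, o3 sits more than two full tenfold steps to the right of o1 on such an axis, which corresponds to a cost more than 200 times higher per response.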

OpenAI spends expensive processing power on every response, but there is no denying the model's extraordinary performance. Still, a few questions have to be asked: if o3 costs this much, how much processing power will OpenAI need for later models such as o4 and o5, and at what cost? These costs could ultimately make subscriptions to such models very expensive, putting them out of reach for many users.

RCO NEWS