In collaboration with Nvidia, Apple has implemented a new text generation technique that makes its large language models (LLMs) run much faster.

Apple engineers described the details of the company’s collaboration with Nvidia in a blog post. Earlier this year, the iPhone giant released its Recurrent Drafter (ReDrafter) technique as open source. The technique gives LLMs a significantly faster way to generate text and delivers “state-of-the-art performance”.

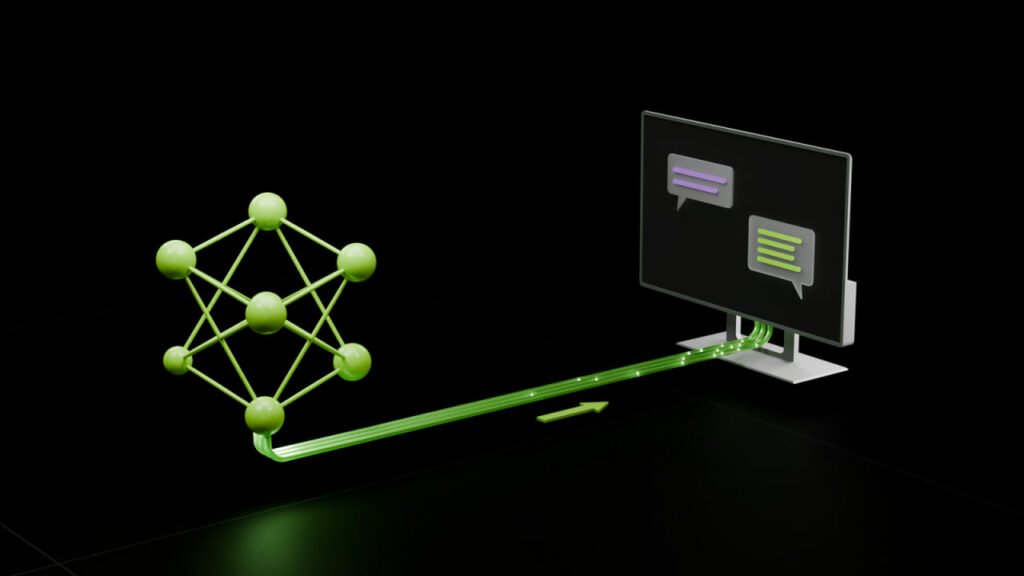

Apple explains in its post that ReDrafter combines two approaches: beam search and tree attention. Both are designed to improve text generation performance.
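To give a rough idea of what beam search does, here is a minimal sketch in Python. It is not Apple’s ReDrafter code: the toy probability table `TOY_MODEL` and the function `beam_search` are hypothetical names invented for illustration, and tree attention is not shown. The idea is simply to keep several candidate continuations alive at each step instead of committing to the single most likely next token.

```python
import math

# Hypothetical toy "model": next-token probabilities given the last token.
# A real LLM would compute these from the full context.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.9, "dog": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def beam_search(model, start="<s>", end="</s>", beam_width=2, max_len=5):
    """Return up to `beam_width` sequences as (tokens, log_probability) pairs."""
    beams = [([start], 0.0)]
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            if tokens[-1] == end:
                # Finished sequences are carried over unchanged.
                candidates.append((tokens, score))
                continue
            for token, prob in model[tokens[-1]].items():
                candidates.append((tokens + [token], score + math.log(prob)))
        # Keep only the highest-scoring candidates.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
        if all(tokens[-1] == end for tokens, _ in beams):
            break
    return beams


if __name__ == "__main__":
    for tokens, score in beam_search(TOY_MODEL):
        print(" ".join(tokens), round(math.exp(score), 2))
```

With a beam width of 1 the search becomes greedy and commits to “the” at the first step; with a width of 2 it also keeps “a” alive and ends up with a more probable full sentence in this toy setup.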

Apple’s cooperation with Nvidia

Following its research, Apple partnered with Nvidia to add ReDrafter to Nvidia’s TensorRT-LLM framework. TensorRT-LLM is a tool that helps large language models run faster on Nvidia GPUs. Apple’s technique can also reduce latency and consume less energy.

Part of Apple’s statement reads:

“LLMs are increasingly used for production applications, and improving inference efficiency can have a large impact on computational costs and reduce latency for users. With ReDrafter’s new approach now integrated into the NVIDIA TensorRT-LLM framework, developers on NVIDIA GPUs will benefit from faster token generation for their production applications.”

RCO NEWS