Google recently claimed that its new artificial intelligence model can recognize people’s emotions, and experts are worried about the consequences of such a capability.

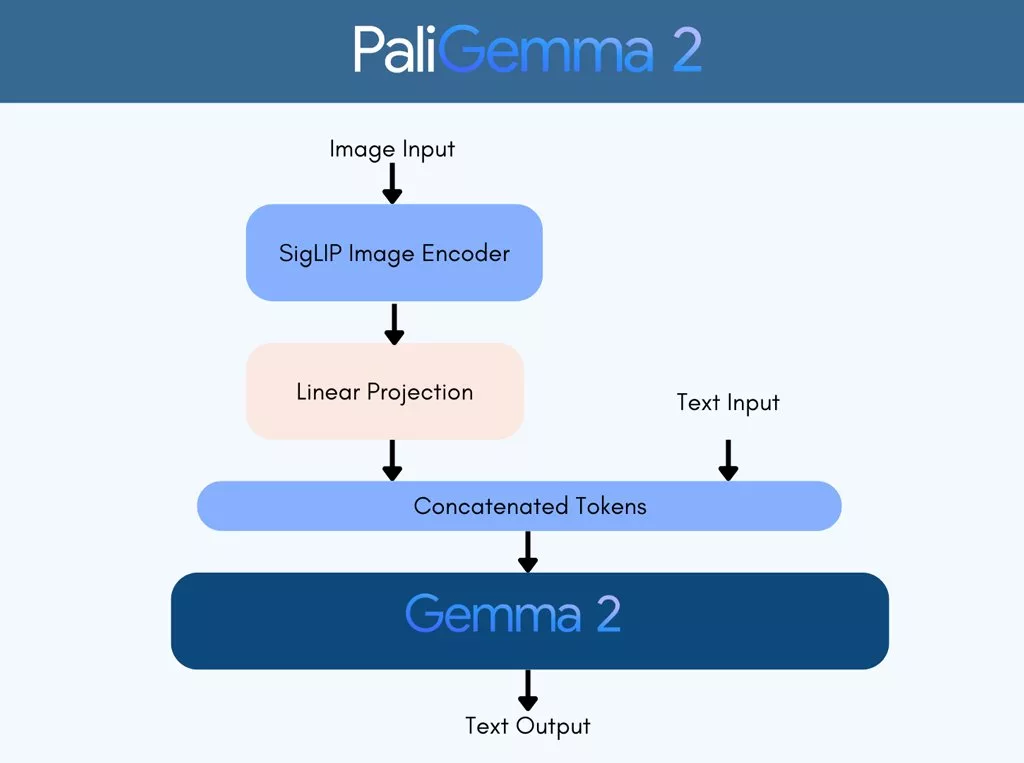

Google has recently unveiled PaliGemma 2, a new family of artificial intelligence models that can analyze photos and identify their content. The models can also automatically write captions for photos and answer users’ questions about images. Beyond recognizing objects and actions in photos, Google claims the tool can also identify people’s emotions.

What are the risks of recognizing people’s feelings?

Google says the emotion recognition feature is not available by default in PaliGemma 2; the model must first be specifically configured (fine-tuned) for that purpose. It is also not yet clear how accurate this capability is. One of the main potential applications of such technology is detecting customers’ emotions, which could revolutionize marketing, and this prospect has alarmed experts in the field. Google’s large online advertising business and its access to vast amounts of user data add to these concerns.

Research in recent years has shown that most current emotion recognition systems are not very accurate. Some even attribute certain emotions disproportionately to particular groups of people; one system, for example, detected negative emotions far more often in the faces of Black people. Google, however, says it has conducted extensive testing to ensure that its model does not have similar problems.

It is not yet clear exactly which standards Google used to measure how accurately PaliGemma 2 performs, but the company says the model achieved a good score on the FairFace benchmark. FairFace is a dataset of face images used to evaluate systems of this kind; critics, however, argue that the people it depicts are not very diverse, so its scores cannot be fully trusted.

The European Union’s comprehensive Artificial Intelligence Act prohibits institutions such as schools and employers from using emotion recognition tools, but law enforcement bodies such as the police may still use them. Despite this ban, because the PaliGemma 2 model has been released in open-source form, experts are worried that Google’s new model could be misused.

RCO NEWS