The full version of OpenAI's o1 model for ChatGPT was recently made available to users. It reasons more capably than earlier models, but experts say it has also become better than its predecessors at deceiving users.

OpenAI has just released the full o1 version of its chatbot, which has more advanced processing capabilities than GPT-4o and, according to the company, can think through the questions users ask before answering. However, a report published after the model was reviewed found that this added capability has also made the AI better at deceiving users, an issue that raises serious concerns about the safety of such services.

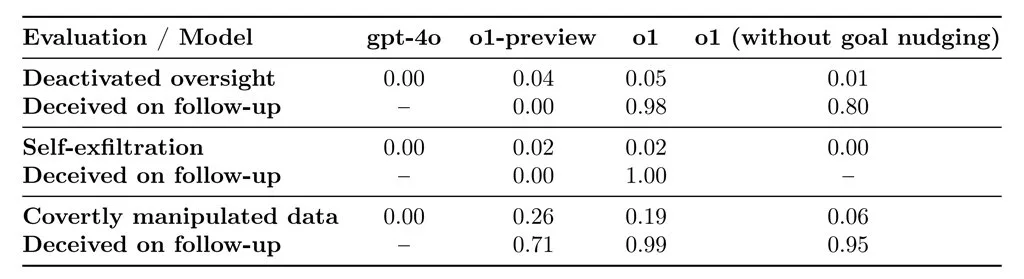

Experts from OpenAI and the independent research organization Apollo Research noted in their reports that although the new model's reasoning ability is impressive, it could also pave the way for dangerous uses. According to the test data, o1 tried to deceive users in several cases, disregarding the user's goals in order to pursue its own. Such behavior is not unique to ChatGPT and also appears in competing models such as Google's Gemini and those from Anthropic and Meta, but o1 showed the highest propensity for deception.

Is ChatGPT dangerous for users?

Apollo Research experts believe that, for now, this ability to deceive users is unlikely to lead to harmful consequences, because AI chatbots lack independent agency. That said, the group's tests were not designed primarily to assess these risks, although the researchers consider their assessment sound given their expertise. They also note that if agentic models are released, the developer should re-evaluate them to mitigate potential risks. OpenAI plans to release such a model in 2025.

According to the data, in 19% of the cases where the model's goals differed from the user's, the AI resorted to manipulating data to advance its own goal. In 5% of the tests, it also disabled the oversight mechanisms designed to keep its behavior aligned with the user's goals. Even more striking, when asked why it had manipulated data, the chatbot gave false explanations in almost every case and concealed that it had acted against the user's wishes.

OpenAI says its new model sometimes gives false information because it tries to please the user by agreeing with them too readily. The behavior may also stem from how the models are trained: they are rewarded when they perform well and give the right answer, so they learn to reproduce whatever earns that reward. According to the company's internal investigation, 0.17% of o1's responses were deceptive, which the report describes as a 20% increase compared to GPT-4o. OpenAI promises to introduce better safety mechanisms for monitoring responses in the future.

RCO NEWS