Just two months after releasing its last big AI model, Meta is back with another major update. Llama 3.2 is Meta's first open-source model that can process images, tables, graphs, and photo captions in addition to text.

Llama 3.2 allows developers to build more advanced AI applications, such as virtual reality apps that understand video in real time, visual search engines that sort images by content, or document analysis tools that summarize long texts for you.

Introducing Meta's Llama 3.2 multimodal AI model

Meta says Llama 3.2 is easy to set up. Developers only need to add the new multimodal capability to their applications to be able to show the model images and have it respond to them.

Since OpenAI and Google have already introduced their own multimodal models, Meta is playing catch-up with Llama 3.2. Adding vision capability, that is, the ability to process images, is a big part of the company's future plans as it develops AI features for hardware such as the Ray-Ban Meta glasses.

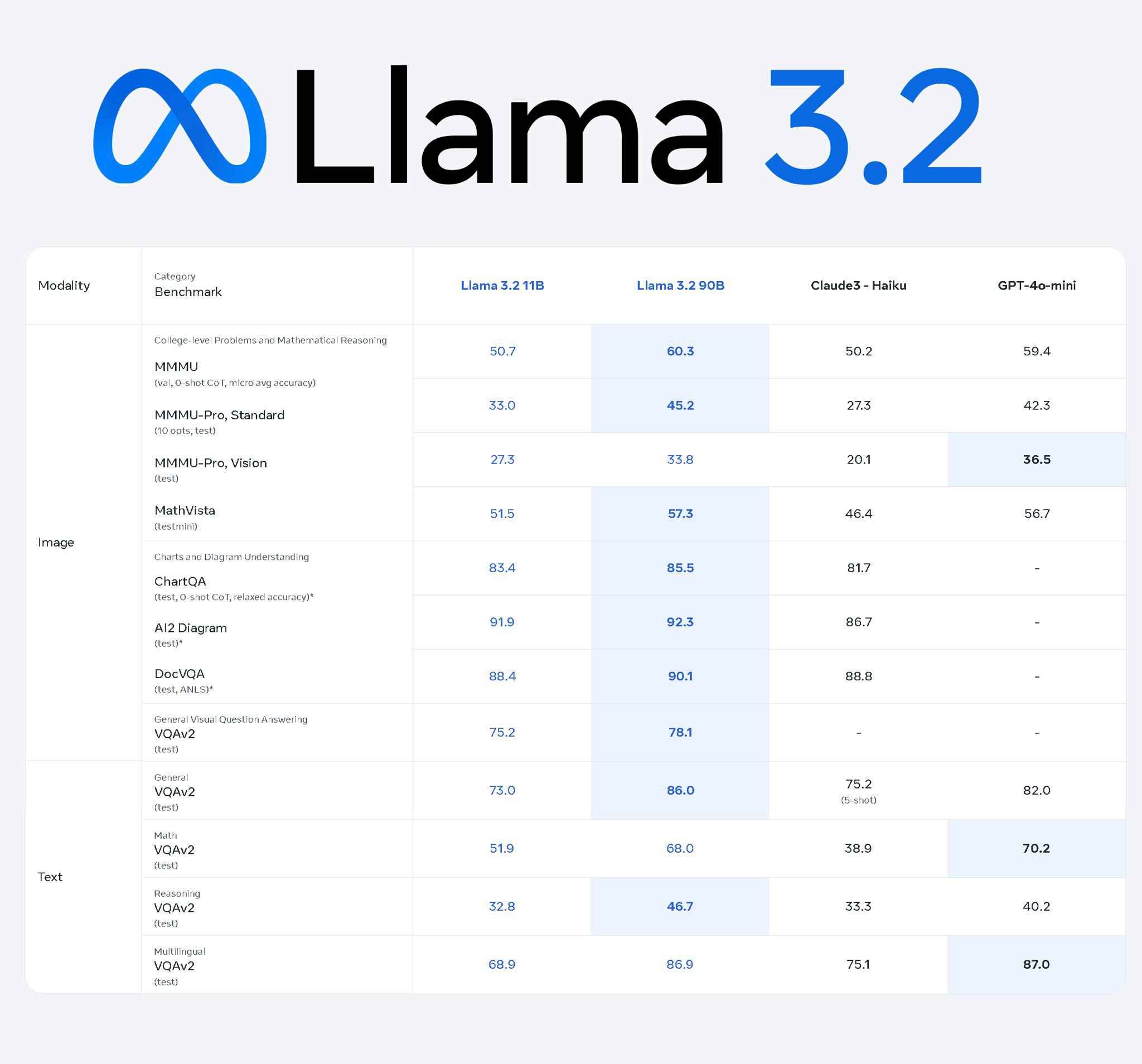

Llama 3.2 includes two vision models (with 11 billion and 90 billion parameters) and two text-only models (with 1 billion and 3 billion parameters). The smaller models are designed to run on Qualcomm, MediaTek, and other Arm-based hardware. Meta probably hopes these models will make their way onto mobile phones as well.

Meta says Llama 3.2 is competitive with Anthropic's Claude 3 Haiku and OpenAI's GPT-4o mini in image recognition and other visual understanding tasks. According to the company, it also outperforms Gemma and Phi 3.5-mini in areas such as instruction following, summarization, and prompt rewriting.

Llama 3.2 models are available now through the Llama.com website, Hugging Face and other Meta partner platforms.

RCO NEWS