You may not have heard of Viggle AI, but you’ve probably seen the viral memes created by the service. Viggle allows users to create short videos with the help of artificial intelligence. You can even use your own image to make a video.

What is Viggle?

Viggle has developed a 3D video model called JST-1, which the company says has a realistic understanding of physics. Viggle CEO Hong Chu says the main difference between Viggle and other AI video models is that Viggle allows users to specify the movement they want for the characters. Other AI video models tend to generate unrealistic and physics-defying movements for characters, but Chu claims Viggle’s models are different.

He said in an interview: “We are actually building a new type of graphics engine based on neural networks. Our model is completely different from existing video generators, which are mostly pixel-based and do not understand the structure and properties of physics. Our model is designed in such a way that it has such an understanding, and for this reason, it works much better in terms of controllability and production efficiency.”

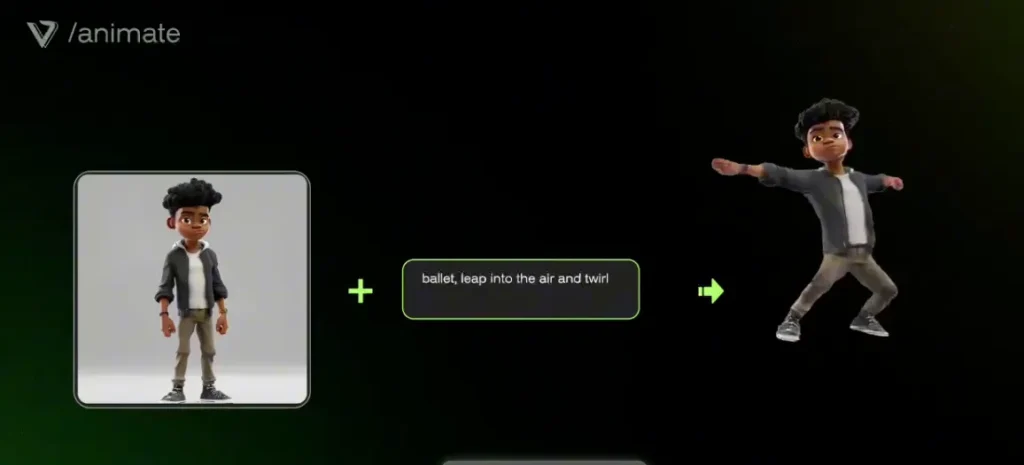

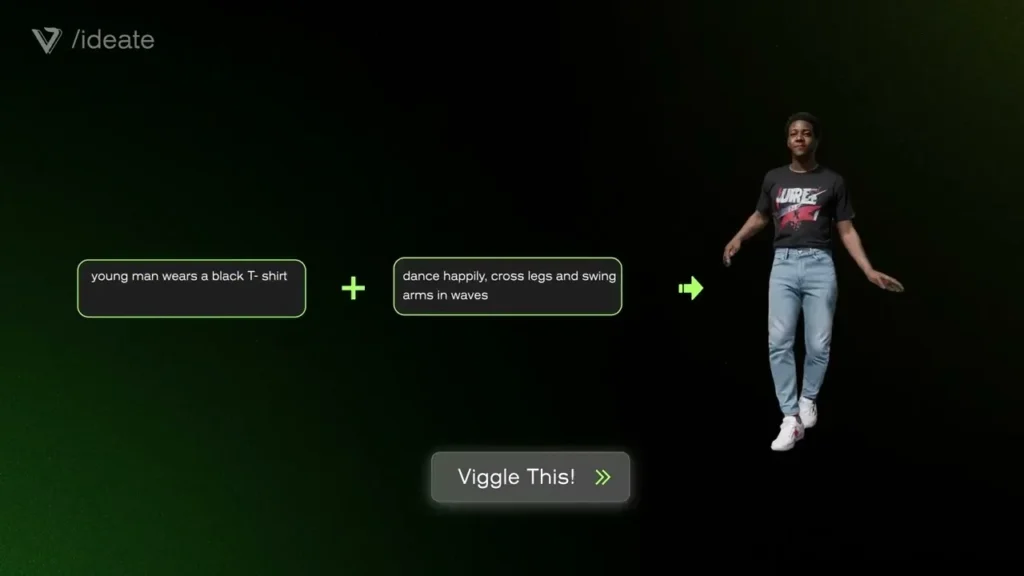

To make a video on the platform, users upload an image of a character or subject along with a reference video, and the system animates the image to follow the motion in that video. Users can also upload an image of a character with a text prompt describing how it should move, or create animated characters from scratch using text prompts alone.

A tool for creativity

However, memes are only a small part of Viggle’s uses. Hong Chu says the model has also been widely adopted by designers and creatives as a tool for visualizing ideas. Although the videos are still not perfect (they are sometimes shaky and faces can be indistinct), the model has helped filmmakers, animators, and video game designers turn their ideas into something visual. Currently, Viggle’s models only create characters, but Hong Chu hopes to generate more complex videos in the future.

Viggle currently offers a free, limited version of its AI model on Discord and its web app. The company also offers paid subscriptions with higher capacity and grants special access to certain builders. The CEO says Viggle is in talks with movie and game studios to license the technology, and that independent animators and content creators have also embraced it.

Funding

Viggle has announced that it has raised a $19 million funding round led by Andreessen Horowitz, with participation from two smaller companies. The startup says the investment will help it scale, accelerate product development, and expand its team. Viggle said it is working with Google Cloud and other cloud service providers to train and run its AI models. Such partnerships with Google Cloud often include access to GPU and TPU clusters, but usually do not include YouTube videos for training AI models.

Viggle and training data: a legal issue

When asked about the training data for Viggle’s AI video models, Hong Chu replied, “Until now, we’ve relied on publicly available data.” This is similar to the response OpenAI CTO Mira Murati gave about Sora’s training data.

When asked if Viggle’s training datasets include YouTube videos, Chu answered unequivocally, “Yes.”

This can be problematic. In April, YouTube CEO Neal Mohan told Bloomberg that using YouTube videos to train a text-to-video AI generator would be a clear violation of the platform’s terms of service. He made these comments in response to the possibility that OpenAI had used YouTube videos to train Sora.

Mohan explained that Google, the owner of YouTube, may have agreements with some creators to use their videos in the training dataset for Google DeepMind’s Gemini. However, according to Mohan and YouTube’s terms of service, using YouTube videos for this purpose is prohibited without obtaining permission from the company.

This startup joins the ranks of others that use YouTube videos as training data and therefore operate in a gray area. Many AI model developers (including Nvidia, Apple, and Anthropic) have been reported to use YouTube transcripts or video clips for training. Here’s the not-so-secret dirty secret of Silicon Valley: everyone probably does it. What is really rare is saying it out loud.

RCO NEWS