Artificial intelligence startup Magic has unveiled LTM-2-mini (LTM is short for "long-term memory"), a model with a context window of 100 million tokens. That many tokens is equivalent to about 10 million lines of code or a large library of novels. The startup also announced that it has raised $320 million, with former Google CEO Eric Schmidt among its investors.

According to Magic, its latest model, LTM-2-mini, has a context window of 100 million tokens. Tokens can be thought of as segmented bits of raw data, and 100 million tokens corresponds to roughly 10 million lines of code, making this one of the largest context windows available. For comparison, Google's flagship Gemini models offer a context window of 2 million tokens.

Magic's new artificial intelligence model

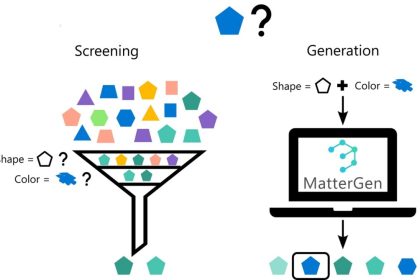

While Magic's LTM ("long-term memory") models have many potential commercial applications, the startup is focused primarily on software development. Magic says that, thanks to its large context window, LTM-2-mini was able to implement a password strength meter and create a calculator program. The company is now training a larger version of the model.

Magic's $320 million round, backed by Eric Schmidt and several other investors, brings the startup's total funding to nearly half a billion dollars ($465 million). Magic develops AI-based tools designed to help software engineers write, review, and debug code. Other companies have built similar tools, but Magic's advantage lies in its models' long context windows.

A long context window helps a model avoid "forgetting" the content and data you have recently shared with it.

Magic also announced a partnership with Google Cloud to build two supercomputers scheduled to come online next year: the Magic-G4, powered by Nvidia H100 GPUs, and the Magic-G5, which will use Nvidia's next-generation Blackwell chips.

RCO NEWS