Shahriar Shariati, a programmer and artificial intelligence researcher with more than ten years of experience, has launched a project called ParsBench. This open-source tool is designed to evaluate the performance of large language models (LLMs) on tasks such as translation, reading comprehension and analysis, with a special focus on the Persian language. Its aim is to strengthen Persian language models and advance artificial intelligence in this area.

Getting into programming

Shahriar Shariati became interested in programming when he was about ten years old. He began by developing mobile and desktop applications and gradually built on that. “I started when I was almost ten years old, and I have been active in this field for more than ten years now,” he says. “I have worked with different programming languages and started my career with mobile and desktop application development. Gradually, I moved into other fields such as artificial intelligence.”

Upon entering high school, he discovered his interest in artificial intelligence, and he pursued it more seriously when he began studying computer science at university. He was gradually drawn to natural language processing (NLP) and has been working and researching in this field ever since.

Interest in natural language processing and work on language models

Shariati goes on to explain why he became interested in NLP and how that interest led to his recent projects. He says: “I became interested in artificial intelligence at the start of high school, and gradually I became interested in NLP. Recently, I became interested in large language models (LLMs), and I have been researching and working in this area for about a year.”

He explains that his work in this field deepened his interest in the research and development of language models, and eventually drew him to projects such as building tools that measure the performance of language models, especially for Persian.

Developing ParsBench and its purpose

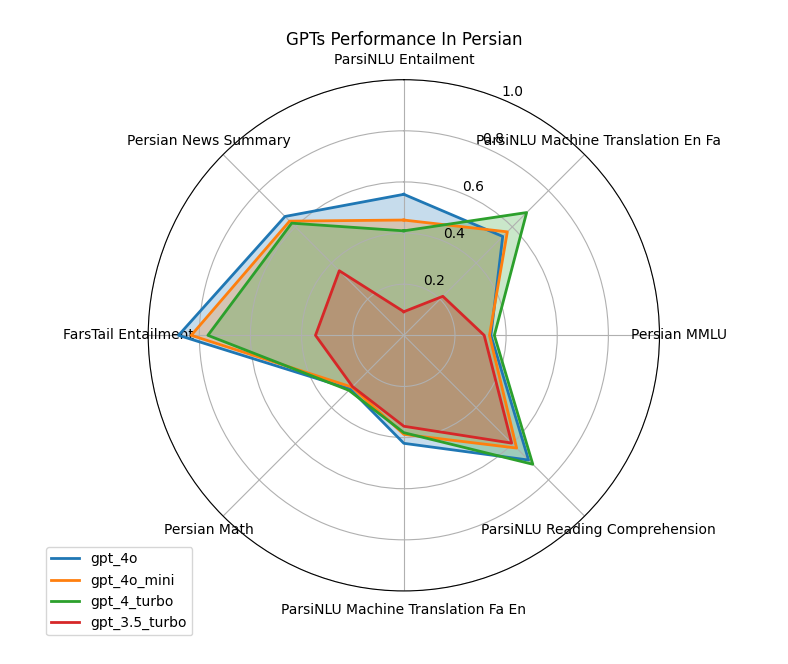

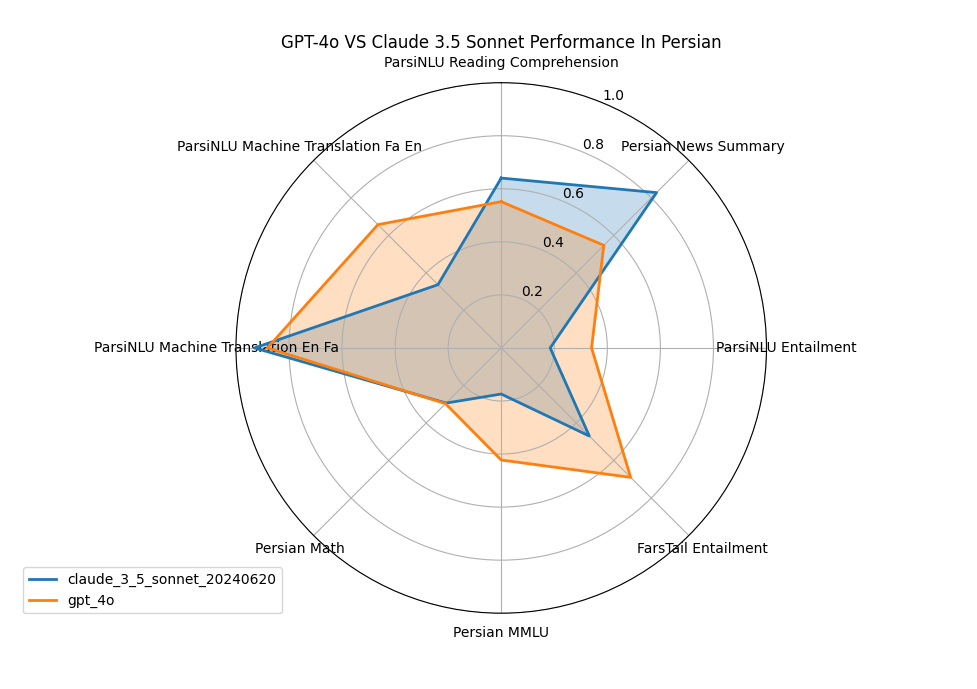

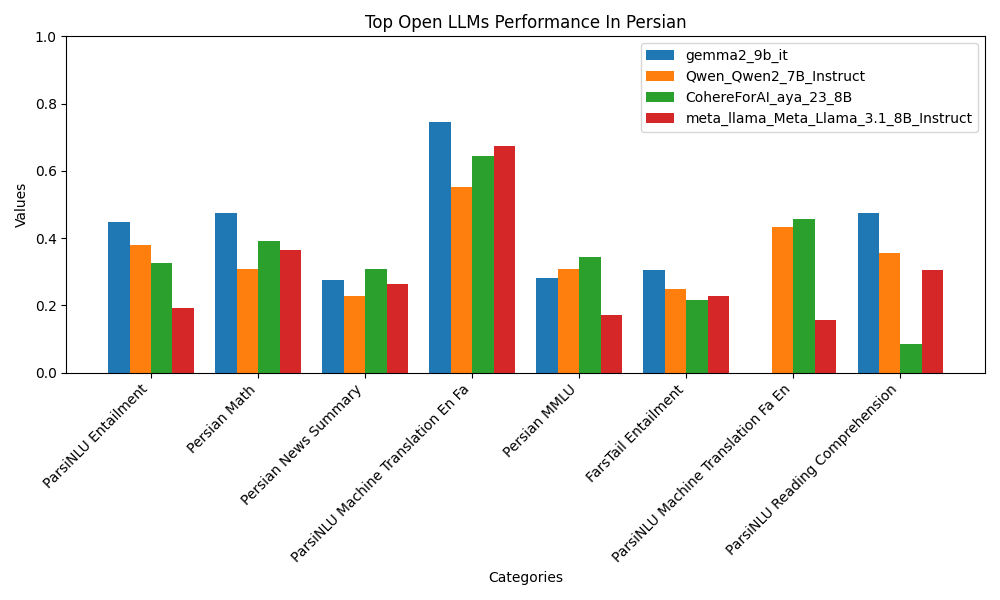

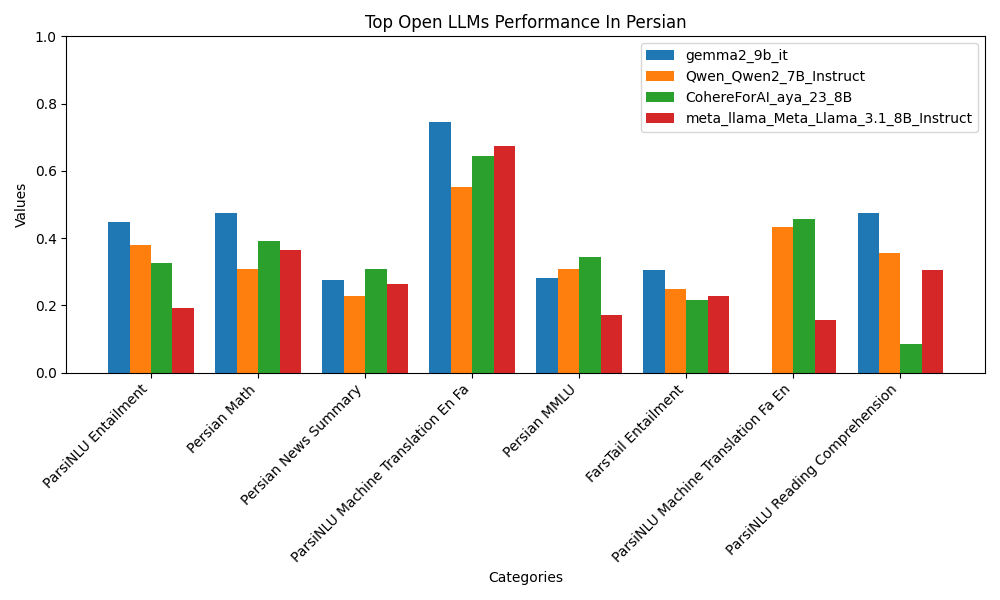

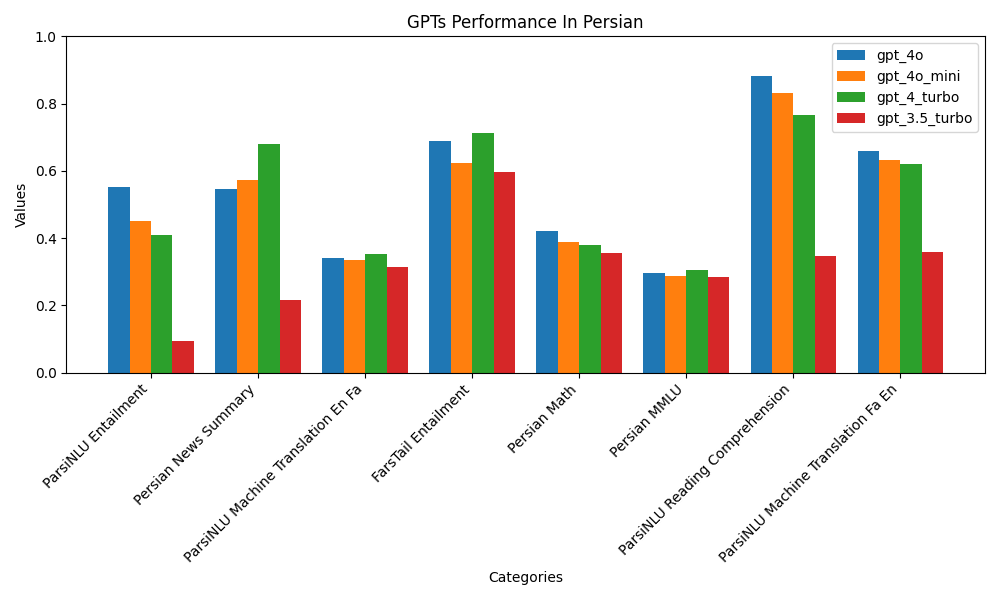

One of Shahriar Shariati’s most important projects is ParsBench, a tool for evaluating large language models on a variety of tasks. He said: “What we did in ParsBench was to develop a tool that measures language models, especially LLMs, on different tasks. These tasks include solving math problems, machine translation, reading comprehension, reasoning and analysis.”

The purpose of the tool is to help companies, startups and even individual users choose the best language model for their needs. Shariati says: “This tool helps people decide which language model is most suitable for their specific work. For example, if someone wants to develop a voice or text assistant for students, they should choose a model that performs well in high school subjects.”

Why focus on Persian

Shahriar Shariati explains his motivation for focusing on Persian in this project: “One of the problems when working with free, large language models like GPT-3 or BERT is that most of them are optimized for English. When we turn to other languages such as Farsi, their performance may not be very good. This is what made me think of developing an accurate and reliable evaluation tool for Persian as well.”

Technical challenges and project development path

Explaining the technical challenges in more detail, Shariati says: “To develop this tool, we modeled ourselves on work done internationally, for example tools such as OpenAI’s Benchmark and the leaderboards developed for English. However, because these were designed mainly for English, we had to adapt many of them for Persian.”

One of the major problems he and his team faced was the lack of suitable resources and data for Persian. He added: “Much of the comparable work done internationally focuses on English, and other languages such as Farsi are poorly covered. This forced us to develop appropriate data and tools for Persian ourselves.”

How ParsBench evaluates models

He explains how evaluation works in ParsBench: “We use automatic evaluation methods. We test different models on tasks such as translation, reading comprehension and reasoning. For example, we give the models four-option multiple-choice questions and they have to choose the correct answer. The models are then scored based on the number of correct answers.”
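The scoring procedure Shariati describes, four-option questions graded by counting correct answers, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not ParsBench's actual API: the `MultipleChoiceQuestion` record, the prompt layout, the `extract_choice` parsing rule and the `evaluate` function are all hypothetical, and a "model" is assumed to be any function that takes a prompt string and returns text.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

LETTERS = "ABCD"

@dataclass
class MultipleChoiceQuestion:
    # Hypothetical record format; ParsBench's real data schema may differ.
    question: str
    choices: Sequence[str]  # exactly four options, labelled A-D
    answer: int             # index (0-3) of the correct option

def format_prompt(q: MultipleChoiceQuestion) -> str:
    """Render a question and its four lettered options as a plain-text prompt."""
    lines = [q.question]
    lines += [f"{letter}) {choice}" for letter, choice in zip(LETTERS, q.choices)]
    lines.append("Answer:")
    return "\n".join(lines)

def extract_choice(reply: str) -> Optional[int]:
    """Return the index of the first standalone capital letter A-D in the reply, or None."""
    match = re.search(r"\b([A-D])\b", reply)
    return LETTERS.index(match.group(1)) if match else None

def evaluate(model: Callable[[str], str], questions: List[MultipleChoiceQuestion]) -> float:
    """Accuracy = number of correctly answered questions / total questions."""
    if not questions:
        return 0.0
    # Each comparison yields True (1) or False (0), so the sum counts correct answers.
    correct = sum(
        extract_choice(model(format_prompt(q))) == q.answer for q in questions
    )
    return correct / len(questions)

# Usage with a toy "model" that always answers A.
questions = [
    MultipleChoiceQuestion("2 + 2 = ?", ["4", "3", "5", "6"], answer=0),
    MultipleChoiceQuestion("Capital of Iran?", ["Shiraz", "Tabriz", "Tehran", "Isfahan"], answer=2),
]
print(evaluate(lambda prompt: "A", questions))  # 0.5
```

Parsing the reply with a regular expression keeps the sketch short but is fragile: a reply that begins with the English article "A" would be read as option A. Other approaches, such as comparing the likelihood a model assigns to each option, avoid that problem.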

Shariati also emphasizes that all the tools and data used in this project are publicly available as open source, so that individuals and companies can use them to evaluate their own models.

Promotion and growth of the project

Since ParsBench has been released as open source, Shariati explains how it has been promoted and grown: “In open-source projects, marketing is usually limited, because most of the work is done voluntarily and by the community. So far, we have introduced the project mostly through social networks and by reaching out to prominent people in the field, and fortunately it has been well received.”

He points out that one of the main goals of this project is to strengthen the Persian open source and research community so that companies and universities are encouraged to evaluate their language models using this tool and publish the results publicly.

Future plans and moving forward

Finally, Shahriar Shariati describes his future plans: “We plan to expand ParsBench and, in cooperation with universities and companies, collect better data for evaluating models. We are also looking to develop more advanced tools that can evaluate model performance on more complex tasks.”

He adds that he has been in contact with various universities and companies to gain their support for the project's continued development. “I am currently in talks with several universities, such as Amirkabir, Sharif and Iran University of Science and Technology (Elm-o-Sanat),” Shariati says, “and we hope these collaborations will help us develop better tools for evaluating Persian language models.”

Overall, ParsBench has been designed and released as open source to help companies and researchers evaluate language models and choose the best model for specific tasks. Shariati hopes to expand the project in collaboration with Iranian universities and companies, collecting better data and developing more advanced tools.

RCO NEWS