By devising an innovative technique, Apple artificial intelligence researchers have made significant progress toward deploying large language models (LLMs) on the company's iPhones and other devices with limited memory.

LLM-based chatbots such as ChatGPT are data- and memory-intensive and typically need a large amount of RAM to run, which is a challenge for memory-constrained devices such as the iPhone. To address this, Apple researchers have developed a technique that stores the AI model's data in flash memory, the same storage that holds your apps and photos.

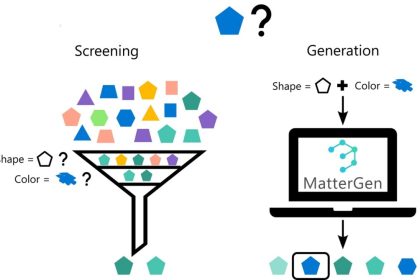

Apple's new approach to the deployment of mobile artificial intelligence

The researchers explain in their paper that mobile phones have far more flash storage than RAM, yet large language models are normally run from RAM. In their approach, the model reuses data it has recently processed instead of loading new data from flash each time. This reduces how much data the model must repeatedly load into memory and generally makes the process faster and smoother.
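To make the reuse idea concrete, here is a minimal Python sketch of a sliding-window cache, loosely modeled on what the paper calls "windowing". It assumes, for illustration only, that each generated token activates a known set of neuron IDs and that the weights for neurons used by the last few tokens stay in RAM. The class `NeuronWindowCache` and its `step` method are hypothetical names, not Apple's code.

```python
from collections import deque


class NeuronWindowCache:
    """Hypothetical sliding-window cache of neuron weights kept in RAM.

    Only neurons used by the last `window_size` tokens stay resident;
    anything else has to be (re)loaded from flash.
    """

    def __init__(self, window_size):
        self.window_size = window_size
        self.history = deque()  # active-neuron sets for the most recent tokens
        self.cached = set()     # neuron IDs currently held in RAM

    def step(self, active_neurons):
        """Process one token; return the neuron IDs that must come from flash."""
        active = set(active_neurons)
        to_load = active - self.cached  # only neurons not already in RAM
        self.history.append(active)
        if len(self.history) > self.window_size:
            self.history.popleft()  # drop the oldest token from the window
        # Keep exactly the neurons used within the window; evict the rest.
        self.cached = set().union(*self.history)
        return to_load
```

For example, with `window_size=2`, the first token `[1, 2, 3]` loads all three neurons, the next token `[2, 3, 4]` loads only neuron `4`, and a later token that needs neuron `1` after it has slid out of the window has to fetch it again. The window size trades RAM usage against how often weights are re-read from flash.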

The Cupertino company's researchers also say they grouped the model's data more efficiently, storing related pieces together so they can be read from flash memory in larger chunks and processed faster.
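The grouping idea can be sketched the same way. The paper calls it "row-column bundling": in a feed-forward layer, the column of the up-projection matrix and the row of the down-projection matrix that belong to the same neuron are stored next to each other, so a single contiguous read fetches both. The helpers below (`bundle_rows_and_columns`, `read_neuron`) are hypothetical and use a plain Python list as a stand-in for flash storage.

```python
def bundle_rows_and_columns(w_up, w_down):
    """Hypothetical layout: column i of w_up followed by row i of w_down.

    w_up has shape (d_model, d_ff) and w_down has shape (d_ff, d_model),
    both given as nested lists.
    """
    d_model = len(w_up)
    d_ff = len(w_up[0])
    if len(w_down) != d_ff or len(w_down[0]) != d_model:
        raise ValueError("w_down must have shape (d_ff, d_model)")
    flat = []
    for i in range(d_ff):
        flat.extend(w_up[r][i] for r in range(d_model))  # column i of up-projection
        flat.extend(w_down[i])                           # row i of down-projection
    return flat


def read_neuron(flat, i, d_model):
    """One contiguous slice returns both weight vectors for neuron i."""
    size = 2 * d_model
    chunk = flat[i * size:(i + 1) * size]
    return chunk[:d_model], chunk[d_model:]
```

With `w_up = [[1, 2, 3], [4, 5, 6]]` and `w_down = [[10, 20], [30, 40], [50, 60]]`, neuron `1` is stored as the contiguous run `[2, 5, 30, 40]`, so reading it is one slice rather than two scattered reads. Fewer, larger reads are what make this layout friendlier to flash storage.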

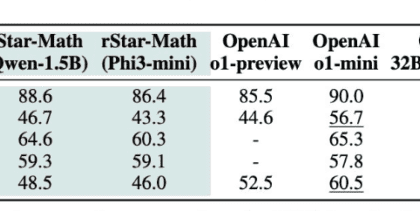

Finally, Apple says these techniques let AI models up to twice the size of the iPhone's available memory run on the device. Compared with simply loading the model from flash, inference is also 4 to 5 times faster on standard processors (CPUs) and 20 to 25 times faster on GPUs. The researchers write in their paper:

“This development is particularly important for deploying advanced LLMs in resource-constrained environments, thereby increasing their applicability and accessibility.”

It was first reported in July this year that Apple is developing its own AI chatbot, possibly called “Apple GPT”, to compete with ChatGPT. More recently, reports have said that Apple plans to overhaul Siri with artificial intelligence, and an upgraded, AI-powered version of the assistant is likely to be introduced at WWDC 2024 next year.

RCO NEWS