In a new study, a team of Apple researchers describes an interesting approach they used to get an open-source model to essentially teach itself how to write good user interface code in SwiftUI. Here is how they did it.

In a paper titled “UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback”, the researchers explain that although large language models have advanced significantly in recent years on a range of tasks, including creative writing and code generation, they still struggle to reliably produce user interface code that is both syntactically correct and well designed. They also point to the main reason for this:

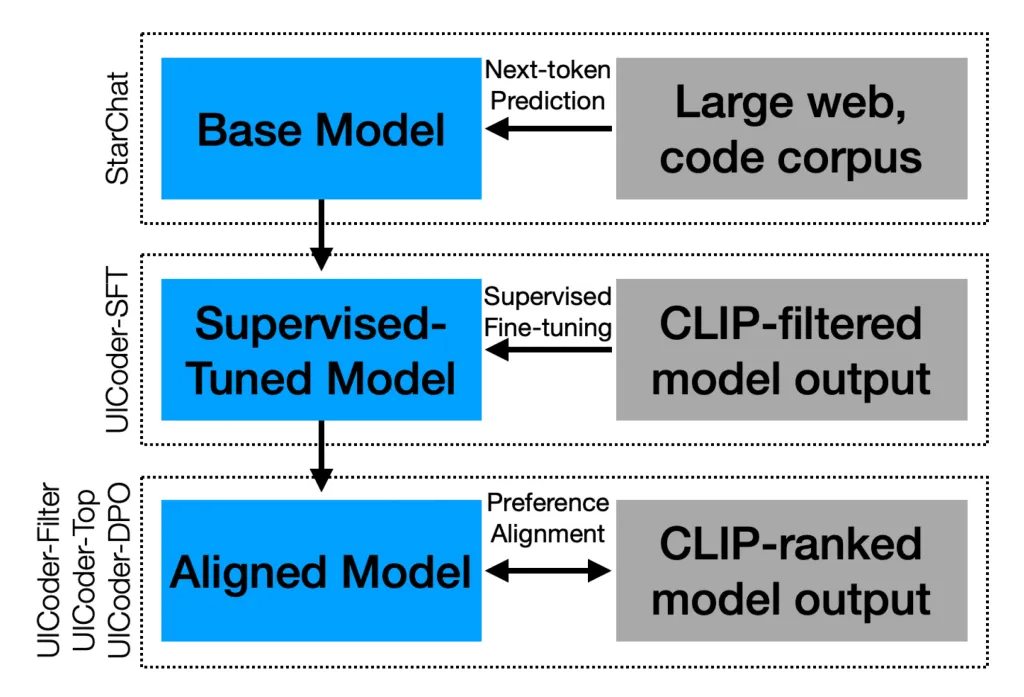

Even in curated or manually authored datasets, UI code samples are very rare, in some cases making up less than 2% of all the examples in a code dataset. To solve this problem, they started from StarChat-Beta, an open-source LLM specialized in code generation. They gave it a list of detailed user interface descriptions and instructed it to generate a massive synthetic dataset of SwiftUI programs from those descriptions.

Every generated program was then run through the Swift compiler to make sure it actually compiled. After this step, a second analysis was performed by GPT-4V, a vision-language model, which compared the compiled interface with the original description to judge how well the two matched. Any output that failed to compile, was irrelevant to the original description, or was a duplicate of another sample was discarded. What remained was a high-quality set of valid programs, which was then used to fine-tune the model.

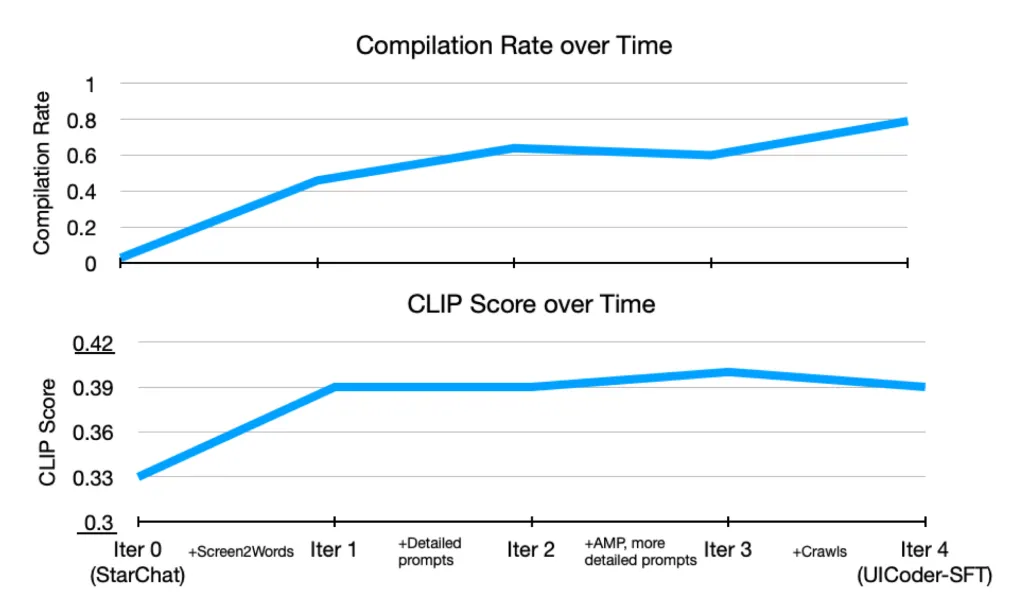
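
The filtering step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' implementation: `Sample`, `filter_samples`, the `compiles` and `relevance` callables, and the `min_relevance` cutoff are all hypothetical names. In a real pipeline, `compiles` would wrap a call to the Swift compiler and `relevance` would wrap a vision-language model scoring a rendered screenshot against the description.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Sample:
    description: str  # natural-language UI description given to the model
    code: str         # SwiftUI program the model generated for it


def normalize(code: str) -> str:
    """Collapse whitespace so trivially different copies hash the same."""
    return " ".join(code.split())


def filter_samples(
    samples: Iterable[Sample],
    compiles: Callable[[str], bool],          # hypothetical compiler check
    relevance: Callable[[str, str], float],   # hypothetical description/UI match score
    min_relevance: float = 0.5,               # assumed cutoff, not taken from the paper
) -> List[Sample]:
    """Keep samples that compile, match their description, and are not duplicates."""
    kept: List[Sample] = []
    seen = set()
    for sample in samples:
        if not compiles(sample.code):
            continue  # drop programs that fail to compile
        if relevance(sample.description, sample.code) < min_relevance:
            continue  # drop programs that do not match the description
        digest = hashlib.sha256(normalize(sample.code).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # drop duplicates of an already kept program
        seen.add(digest)
        kept.append(sample)
    return kept
```

Passing the two checks in as callables keeps the sketch independent of any particular compiler invocation or scoring model, so each filter can be swapped or stubbed out for testing.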

They repeated the process several times and observed that with each new cycle, the improved model generated higher-quality SwiftUI code than before. This, in turn, led to an even cleaner dataset. After multiple rounds of this cycle, the research team had produced nearly one million SwiftUI programs, along with a model they call UICoder, which consistently generated code that compiled and produced interfaces much closer to the original text prompts than the base model did.

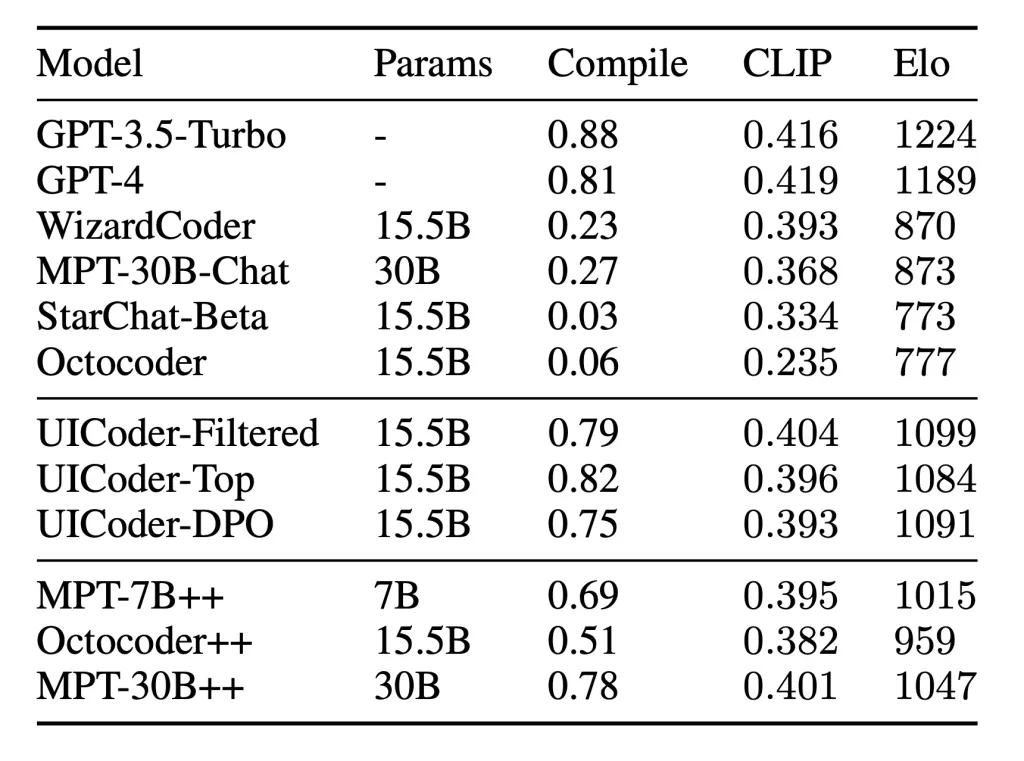
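
The overall generate, filter, and fine-tune cycle can be sketched as a simple loop. This is a toy outline under stated assumptions, not the paper's training code: `self_improve` and its `generate`, `keep`, and `finetune` parameters are hypothetical placeholders for the model's sampling step, the compile and relevance filters, and the fine-tuning step. Fine-tuning the previous round's model in each round is also a simplifying assumption.

```python
from typing import Callable, List, Tuple, TypeVar

Model = TypeVar("Model")
Sample = Tuple[str, str]  # (description, generated code)


def self_improve(
    model: Model,
    descriptions: List[str],
    generate: Callable[[Model, str], str],             # hypothetical: sample code for a description
    keep: Callable[[str, str], bool],                  # hypothetical: automated quality filter
    finetune: Callable[[Model, List[Sample]], Model],  # hypothetical: returns an updated model
    rounds: int,
) -> Tuple[Model, List[int]]:
    """Run the feedback loop and report how many samples survived each round."""
    kept_per_round: List[int] = []
    for _ in range(rounds):
        # 1. The current model generates one program per description.
        candidates = [(d, generate(model, d)) for d in descriptions]
        # 2. Automated feedback keeps only the acceptable programs.
        dataset = [(d, code) for d, code in candidates if keep(d, code)]
        kept_per_round.append(len(dataset))
        # 3. The surviving programs become training data for the next model.
        model = finetune(model, dataset)
    return model, kept_per_round
```

If the loop works as the article describes, the number of surviving samples should tend to grow from round to round, because each fine-tuned model produces more programs that pass the filters.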

In the researchers' tests, UICoder significantly outperformed the StarChat-Beta base model on both automated metrics and human evaluations. It also came close to GPT-4 in overall quality, and even surpassed it in compilation success rate. But the interesting thing is that the original dataset had accidentally excluded SwiftUI code. One of the study's unexpected findings stemmed from a small slip in data collection. StarChat-Beta was trained primarily on three datasets:

- TheStack, a large dataset consisting of 250B tokens from permissively licensed code repositories

- Crawled web pages

- OpenAssistant-Guanaco, a small instruction-tuning dataset

The catch, according to Apple's researchers, is that StarChat-Beta's training data contained almost no SwiftUI content. Swift code repositories were unintentionally excluded when TheStack was prepared, and a manual review found that the OpenAssistant-Guanaco dataset had only one example (out of ten thousand) containing Swift code in the response field. The researchers hypothesize that any Swift examples StarChat-Beta saw during training were probably collected from crawled web pages, which tend to be lower quality and less coherently structured than code in repositories.

This means that UICoder's gains did not come from rehashing SwiftUI examples the model had already seen, since there were almost none in its original training data. Instead, they were a direct result of the self-generated, filtered datasets that Apple built through its automated feedback loop. These findings led the researchers to hypothesize that although their method was implemented for user interfaces written in SwiftUI, the approach is likely to generalize to other languages and UI toolkits.

The paper, titled “UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback”, is available on arXiv and provides full details of the process and results for researchers and developers.

RCO NEWS